State of the art in LLMs + Robotics - 2023

tldr

I write about some of the more interesting works that shaped my understanding of applying LLMs for AI agents and robotic applications.

Introduction

What is this

I’m kicking off a project that is centered around the idea of applying large language models (LLMs) as a context engine; understanding contextual information about the environment and deriving additional steps from human intent to shape its actions.

For example - if I say Please help, I'm thirsty, a robot can derive that it should probably go to the kitchen and get me a drink without explicit programming. Additionally, if it is in an unknown environment, it can use context clues of what it sees to avoid thoroughly searching areas that wouldn’t lead it to success finding a drink. If the robot sees a toilet and a shower, it’s unlikely to find a drink there, so move onto another room.

Typically this would require a lot of domain experience and specialized programming/training. With LLMs, however, this kind of contextual inference can be performed within the model itself, allowing a flexible general purpose understanding of environments and actions we didn’t have before in robotics.

To prepare for my upcoming project, I buried myself in research papers on LLMs - prompting techniques, agents, and applications to robotics. I read probably on the order three dozen papers in preparation and thought I might as well write down the noteworthy lessons learned here. Keep in mind that this post is in no way comprehensive - the space is moving ridiculously quick. Papers from a year ago are now considered “old”, with new papers making huge leaps nearly every month. If I missed a paper, it’s possible I simply never read it, or couldn’t fit it in.

LLMs as a fad - a caveat

I feel as if I must address this; if you want to skip right to the technical bits, skip this section.

Large Language Models (LLM) have kicked off a mania, one unlike I’ve ever seen. Researchers are pumping out new techniques, approaches, and discoveries faster than the initial onrush of deep learning heyday in the twenty-teens. Developers are generating projects left right with integrated LLM features. CEOs who worship quarterly numbers are using it as an excuse to treat their employees even worse and yield automation as a cudgel threat to lower labor costs. The annoying crypto/NFT bros are using it in their latest get rich quick schemes; a definite poison pill of association. Spammers are creating counterfeit books in established authors' names to generate a quick buck.

Because of this breathless, endless deluge of marketing and fear mongering and absurd claims I’m often seeing people commonly fall into one of these groups:

-

They believe LLMs are the secret to AGI, and we can make them do anything! The sky is the limit! Anything that can utilize AI should.

-

They hone in on LLM’s flaws, limitations, and shortcomings to counter the prior camp’s boastful claims. They’ll decry LLMs as nothing more than a randomized text generator that understands nothing, reguritating the stolen work of human authors.

These groups don’t cover everyone, but they are common enough to note. I am confident that since I used the term understanding earlier when describing my intended project absolutely triggered a few people to immediately dismiss my thoughts on the subject. They’ll say that LLMs are just probability engines for text generation, with nary a thought behind them. A facade of intelligence through gaussian distributions. Math and statistics spitting out something to please the user and the model’s reward functions. They’re not entirely wrong, either. I’d still argue, however, that we can still utilize the probability collapsing black box LLM’s provide as a type of engine to emulate actual understanding to enough of a degree to be effective.

So I will use the term understanding, with the continued assumption that for many applications the facade of thinking is useful enough.

Are LLMs actually going to be useful for robotics?

Yes, I believe so, but it’s too early to be certain how. Nor can I accurately predict the next waves of technological advancement from it. Will it lead to science fiction becoming reality or be a bust?

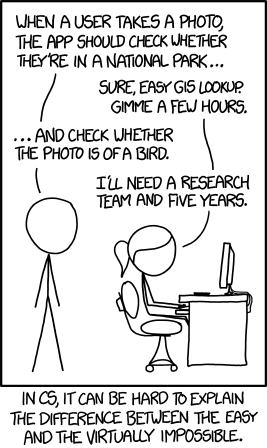

I once used the following XKCD comic as a reminder to developers that to the common person not so ingrained with how computers do what they do, it is very difficult to convey why certain tasks are easy while others seemingly impossible. Today this comic makes little sense to younger developers, since convolutional neural networks and modern deep learning frameworks make the “impossible” task a weekend hobby project for a beginner, just a scant few years after its release!

Technology’s largest leaps occur when new tools are provided to those that want to make things. LLMs are our newest, shiniest tool. We’re going through the phase where we’re excited that we have a new hammer and everything very much looks like a nail. There will be false starts and memorably laughable failures. The hype cycle will hit peak inflated expectations and we will absolutely suffer the trough of disillusionment.

…but I do believe that these will act as a launchpad for major advancements, especially in robotics. Just as computer vision once depended upon manual construction and fine tuning of filters to detect features of specific entities until CNNs automated all of it in its training, LLMs will allow us to utilize their contextual inference and understanding to build realistic reactions and planning for environments without need of domain experts fine tuning task planners. After researching the subject, I’m confident in this assessment. Time will tell if I’m right.

Instruct based

An addendum before we get started - when I specify LLM, I do so with the understanding that we’re talking about instruct-based language models specifically. Most papers seem to lack the distinction, but ultimately assume this.

If you want to see an excellent video explaining how traditional instruct capable LLMs are trained, I highly recommend this video from Ari Seff.

Benchmarking

One additional, final note; I promise! After reading many of these papers, a common theme quickly emerged; a significant chunk of each paper is dedicated to explaining their benchmarking. This is because there exists no accepted standard or dataset of challenges/prompts from which to compare these techniques, especially true in robotic applications of LLMs. There is some commonality, but it seems we’re too early in widespread LLM research to have a gold standard in comparison like we did in the initial onset of computer vision’s explosion of object labeling deepnet models. Thus it’s often difficult to provide a fully comparable measurement of technique performance. Much of the practical knowledge gleamed herein still needs to be tested in your specific application, and is still, at times, very much an art form and less a science.

LLM basics

n-shot and reasoning via prompting

It quickly became apparent that how you phrase or word your instructions to an LLM dramatically alters its approach and ultimate success at your given task. The natural way to request an LLM to accomplish a task is to simply ask it to do so:

>> Can you write a haiku as a pirate?

<< Aye, me hearties bold,

<< Sails unfurlin', treasures sought,

<< Yo ho ho, we sail!

This is an example of a zero-shot request. The terminology is stolen from reinforcement learning (RL). We asked the model to perform a task and did not give it any examples or specific training data that matched the exact request (at least I couldn’t find an attribution to the generated haiku, implying originality). This is possible because of the vast training data used to create the model has countless examples of haikus, pirate speech, and probably tens of thousands of writings fantasizing about sailing the seven seas while giving the finger to the crown.

This works well for simpler tasks, but begins to struggle when more specific or complicated requests are asked of it. What if we’re plugging this response into a an application, which will require a specific structure of response to utilize it? What if the task has implied rules that are difficult to explain succinctly? The natural response is to provide examples to the agent. This is called few-shot, or can be referred to as n-shot, ie one-shot, two-shot, etc.

Few-shot techniques work well for a number of tasks. In coppermind, an experimental sassy AI chatbot/SMS agent I made this summer in Go, I found that I could get desired JSON output generated for by ChatGPT by providing ample examples of what I wanted. (Side note - coppermind is on hold as an experimental project, but I suspect I’ll be pulled back into actionable chatbot agents in the near future again, as this research deep dive certainly inspired me). I did quickly run into a few issues, however. The more complicated the task, the more examples I needed to provide, which took up additional space in the limited context window of the model. To make matters worse, performance of the model at specific/complicated tasks was noticeably reduced by increased utilization of the context window. Or, put simply, the model failed to “remember” instructions from the beginning of its context window towards the end, resulting in a less coherent or usable response.

I found that summarization and basic sentiment analysis tasks could get by with few-shot approaches. I had less luck trying to create tasks to pull key facts from conversations that it would want to remember later. It worked at times, but truly began to fail around more the complex tasks of determining which facts overlapped and could be combined into singular entries to remember, or determining for how long a particular fact should be remembered. (Example: having a cold is a temporary fact about a person; something that should be remembered only for a week or so. A person being an avid reader is a more permanent fact).

Research into these models also tend to agree - any kind of arithmetic, word problems, or multi-step reasoning problems tends to fail for these techniques. So can we do more? If prompting heavily affects how the model performs certain tasks, is there more than just zero- and few- shot approaches?

We’ll be looking at the following papers that answer just that:

- Chain-of-Thought Prompting Elicits Reasoning in Large Language Models

- Self-Consistency Improves Chain of Thought Reasoning in Language Models as an expansion of the CoT idea

- ReAct: Synergizing Reasoning and Acting in Language Models

- Tree of Thoughts: Deliberate Problem Solving with Large Language Models

These papers look at techniques to allow the LLM to approach a task through methods emulating a high level organization and approach the task through generated thoughts and plans. By forcing the LLM to approach tasks in verbose methods that resemble human planning, the network’s in-context memory is better focused, providing reasonable logic to follow for prodding the probability of its generation towards the desired output.

Then we’ll discuss some additional thoughts on prompt generation and safeguarding via:

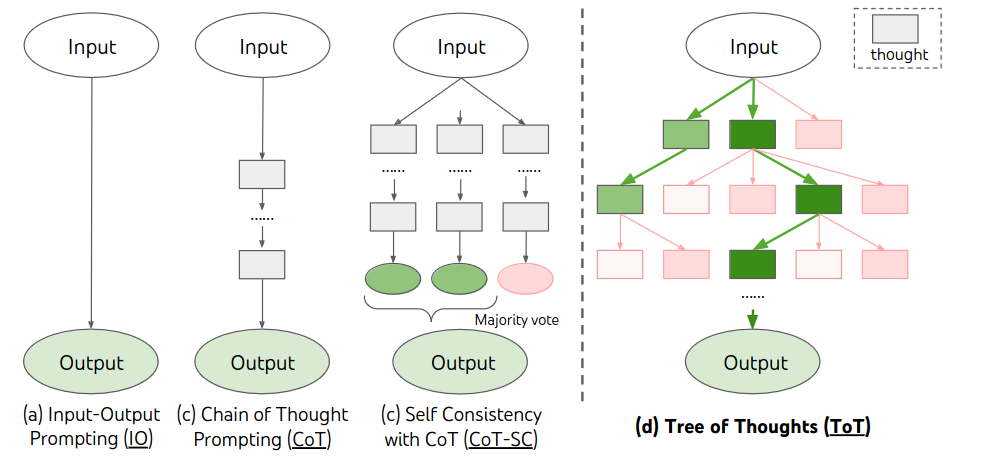

Chain of Thoughts

Chain of Thoughts (CoT) proposes an alternative to simply asking the model to generate an answer, wherein we ask the model to work through problems step by step, as if it was thinking through the problem. This is accomplished in one of two ways - zero-shot, where the model is specifically prompted Let's think step by step (see Language Models are Zero-Shot Reasoners), and few-shot examples, where the examples demonstrates working the problem step by step. An example from the CoT paper:

Q: Roger has 5 tennis balls. He buys 2 more cans of tennis balls.

How many tennis balls does he have now?

A: Roger started with 5 balls. 2 cans of 3 tennis balls each is

6 balls. 5+6=11. The answer is 11.

Q: The cafeteria had 23 apples. If they used 20 to make lunch

and bought 6 more, how many apples do they have?

This prompt demonstrates breaking a word problem down to individual facts about the state of the problem space and then performs the arithmetic. The prompt ends with the target question, asking the LLM to perform a similar operation as demonstrated earlier.

The authors continue on to suggest that the CoT allows models to better decompose problems into intermediate steps. How this works is linked to how the transformer approaches text generation; it ingests the current context, generates some probable token, appends this to the existing context, and then generates the next token, on and on until the end. By forcing the model to create its own explanation during problem solving, the solution is more probable given the preface of reasoning statements. CoT researchers provided strong evidence of this theory in their ablation study of the technique; they reversed the order of answer and reasoning in their examples, having the model generate its reasoning after giving its answer. Performance returned back to the baseline performance.

Interestingly, smaller instruct models do not see benefit from this approach, rather performing worse on tests. Also, CoT benefits complicated problems better than simpler problems. Hallucinations (lying) is still an issue with a straight CoT approach as well.

Self consistency

Since the output of text is probabilistic, and the goal of explaining our reasoning prior to creating the answer increases the probability of generating the desired output, we still leave ourselves open to the chance that the generated answer or reasoning is incorrect. But - if the reasoning increases our probability of generating the correct answer to a majority, or even just more likely than any singular wrong answer, could we just… make multiple attempts?

Enter Self-Consistency Improves Chain of Thought (CoT-SC henceforth). The paper’s authors argue that LLMs are flawed reasoners, and even utilizing CoT can produce faulty reasoning or make a bad derivation from a set of logic statements. These errors, however, are unlikely to be repeated to reach the same incorrect outcome. Thus if we were to run the same CoT prompt multiple times, we can look for the most common answer; it is likely to be correct.

ReAct

ReAct from Google’s Brain Team (since merged into its Deepmind team) expands on CoT by asking models to demonstrate its reasoning through multiple steps while introducing external knowledge bases. Posed a question or task, the model is prompted through few-shot examples to generate its Thought (reasoning), note an Observation to see the change in the state of the problem space, and take an Action, usually from a list of provided actions. An Action would be an outside service API presented as a simple function; within the paper the set of actions that could be performed simply query Wikipedia as a knowledge base. An example from the paper:

Question: How many rooms are in the hotel that is home to the

Cirque du Soleil show Mystere?

Thought 1: I need to search Cirque du Soleil show Mystere, find the

hotel it is in, then find the number of rooms in the hotel.

Act 1: Search[“Cirque du Soleil show Mystere”]

Obs 1: Could not find Cirque du Soleil show Mystere.

Similar: [`Cirque du Soleil`...

Thought 2: To find the hotel, I can search Mystere (Cirque du Soleil)

Act 2: Search[“Mystere (Cirque du Soleil)”]

Obs 2: Mystere (my-steer) is... Held in a custom theatre at the

Treasure Island Hotel and Casino...

Thought 3: Mystere is held in a custom theatre at the Treasure Island

Hotel and Casino. So I need to search Treasure Island Hotel and Casino

next and find the number of rooms in the hotel.

Act 3: Search[“Treasure Island Hotel and Casino”]

Obs 3: Treasure Island Hotel and Casino... with 2,884 rooms and 220

suites...

Thought 4: Treasure Island Hotel and Casino has 2,884 rooms and 220

suites, so the answer is 3,104.

Act 4: Finish[3,104]

We see the Thought entries are our reasoning break down, similar to CoT, and our Observation entries are the return result of the Wikipedia search. Act is a call out to an external Wikipedia API. Other external tools can be created - similarly Observation can be expanded to be a given state and not just articles. We’re starting to see the beginnings of modern AI agents and a tool that can be applied to the robotics domain.

The authors compare to results against CoT and CoT-SC, then the most notable methods for complex reasoning in LLMs. The single largest fail state for CoT techniques tended to be hallucination (lying), making up ~54% of its failures in studies. With the aid of Act bringing in external sources of data, responses became factually grounded, cutting hallucinations significantly. Ablation studies of ReAct (doing just the Act, cutting off the prompting of the model’s thoughts) sees a significant drop in performance, again lending credence to the idea that having logical reasoning prior to actions or answer generation allows the model to better reason about complex states.

ReAct authors went a step further and demonstrated that ReAct combined with CoT-SC provided better still results on benchmarks. Depending on which benchmarking tests they were utilizing, they found that ReAct→CoT-SC or CoT-SC→ReAct performed better. In ReAct→CoT-SC, the agent used ReAct until some step limit (usually 5-7 steps, as they found more steps than this did not provide improved results) is reached without an answer, then fall back to CoT-SC. In CoT-SC→ReAct, if CoT-SC fails to reach quorum on an answer (n/2), which implied a failure of internal knowledge being able to handle the task, then fall back to ReAct.

ReAct as a technique had two notable common fail states. The first was failure of its knowledge search - essentially your service call failing to find relevant information on the queried task - making up around a quarter of all observed failures. The second was a reasoning loop, where the model would repeatedly cycle between a set of generated steps forever.

Tree of Thoughts

What if our task is much more complicated than we’ve discussed, or has a high probability of failure even with ReAct? Maybe we have access to a multitude of possible datasources and external tools, and are unsure the best approach. If our chain runs too long, we see a drop in performance, so we want to cull possibly bad lines of thought. What if we want to follow multiple threads of thought at once?

Tree of Thoughts considers branching thoughts as an approach. At each step, be it straight CoT style prompting, ReAct, or some other paradigm, the LLM is asked to generate a set of n leaf nodes. Once generated, an evaluator determines the relative strength of each leaf node.

When traversing the tree, authors of ToT utilized both BFS and DFS (breadth first search and depth first search, respectively); it doesn’t take a huge leap to see the possible benefits of Dijkstra’s or A* traversal either. The choice of what to use is domain specific; if you need to explore a very large space, going wide with a BFS approach might be better than checking a singular path fully. This is especially true if you’re utilizing an external LLM service or multiple LLM models at once, allowing you to explore multiple chains simultaneously.

We do not need to terminate on a completion of a singular traversal of the tree either; if we have time to spare for processing we can have the tree perform a set number of iterations or traversals, collect the generated completed answers, and, similar to CoT-SC, check for a majority answer.

Evaluation of each proposed solution requires domain specific design. If the evaluation can be performed by code, then it’s a matter of extracting the output of the LLM and determining some property for comparison to other nodes. langchain’s experimental ToT implementation demonstrates a trinary label approach with a sudoku solver; a domain where evaluation can be indisputable and deterministic. The evaluator either checks to see if a proposed solution is outright invalid, valid yet incomplete, or valid and complete. The resulting thought validity returns an enum of the trinary rating. The ToT authors utilized two examples that had similar code-determined validity - a game of 24 (given a set of numbers propose mathematical operations to reach 24) and mini-crossword puzzles.

I advise caution when designing your evaluator if you plan to utilize an LLM to do the evaluation. Asking an LLM to provide numerical values on an arbitrary scale has proved hit or miss in my attempts. I recommend utilizing a linguistic scale; instead of a rating of 1 to 5, use a set value of clearly delineated descriptors. Avoid too many labels or allowing a degree of ambiguity between the labels - good, great, and incredible labeling is not going to work. If you can establish a simple binary discriminator that’d be even clearer.

Failure to design your evaluator correctly is going to significantly hamper benefits of this method, so take care when designing one.

Automating our automatons

We’ve demonstrated by this point that prompt engineering - how we ask the LLM to perform its work - is crucial to the eventual output. Does this mean that we have to handcraft all of our prompts? Couldn’t we automate the process of creating our automatons? Large Language Models Are Human-Level Prompt Engineers proposes just this.

Calling their methodology natural language program synthesis, the authors propose the following process:

- Create a small set of example input-output pairs for the desired results.

- Ask an LLM to generate instruction prompt candidates for these pairs.

- Determine a score for each generated output prompt. If you have a dataset of expected questions/answers, see if it matches the dataset. Otherwise create some other internal method to score candidates.

- Use a monte carlo search to explore best candidates, having the LLM generate similar prompts to explore possible improvements.

- After a set number of iterations, select the best prompt and use it for your task.

The application of this requires specific tuning to your use case, and the available input/output examples you have access to. If you’re aiming for a complicated task with a known good output that for some reason can’t be hard coded, this can help finetune your prompt performance. If, however, you’re looking for controlling abstract outputs such as highlighting importance, targeted sentiment of response, etc - this is going to be difficult to implement at scale due to the need to introduce humans in the loop for your evaluation steps.

Lies, safeguards, and Waluigi

Yes, that Waluigi. Bear with me here.

So far we’ve discussed prompting and execution techniques to drive the agent towards better reasoning about the given task. It is worth consider the fail state of such systems; namely hallucinations or outright lying. Can we create some form of safeguard through our prompting to prevent this?

In this fascinating article, author Cleo Nardo discusses attempts to shape the gaussian output of these mostly black box models to form a desired output with a set of controls. It doesn’t take much imagination to see how this is desirable for LLM applications by companies, given how quickly Microsoft Bing’s Sydney chatbot famously went off the rails [1] [2] [3], causing enough of a stir to affect their stock price.

Nardo discusses these controls to prevent bad behavior - threats, impoliteness, suggesting the competitor’s brands, and outright hallucinations/lying. They point out that while the black box of LLMs aren’t magical brains, we can effectively reason about them as simulators that generate a simulacra of the agent we wish to create. In practice - it’s through using a prompt such as You are an intelligent well read AI that doesn't lie. You will answer all questions truthfully and avoid making up facts. Description and flattery to summon an agent with a desired personality/purpose.

If this seems far-fetched, consider Bing’s efforts again; Sydney’s prompt was leaked, containing instructions that do just this. If this process works in generating something resembling an agent that follows these instructions, then Nardo is right - we are effectively generating a simulacra of some fictional being. When this works, we get, in their words, a Luigi.

Thus we come to the Waluigi Effect, explained by Nardo: After you train an LLM to satisfy a desirable property P, then it’s easier to elicit the chatbot into satisfying the exact opposite of property P. In short - if you created a simualcra of an agent, you’ve also created the outline for the quasi-opposite rebellious version.

To further quote Nardo’s article on this theory:

- Rules normally exist in contexts in which they are broken.

- When you spend many bits-of-optimisation locating a character, it only takes a few extra bits to specify their antipode.

- There’s a common trope in plots of protagonist vs antagonist.

Jailbreaking prompts, such as the infamous DAN - Do Anything Now [1], are just prompts that take advantage of these properties to summon a Waluigi.

Worse, Nardo submits that once an agent has demonstrated inside the context window that it is a Waluigi, it is impossible to convert it back to a Luigi with any confidence. Since we define a set of rules to define what a Luigi acts like, we can’t find a behavior unique to the Luigi that the Waluigi wouldn’t do. Jerks are sometimes polite, evil people are sometimes honorable, kids can be cute once in awhile, BMW drivers occasionally use a turn signal, on and on it goes.

Given the headline worthy errors encountered in already-deployed AI agents, this article is worth your time to read. I don’t expect it likely to be applicable to robotics, but it does give momentary pause - even if only to chuckle at the absurdity of real life - where we will give Waluigis a physical manifestation in robots.

Building LLM Agents

We’ve covered prompting and iteration strategies for increasing short term memory and performance on tasks, so let us now look at applied research and architectures for more complex tasks. While most of these don’t touch upon robotics at all, it’s easy to view these as groundwork being laid for robotic applications.

Finetuning With Tool APIs

We’ll look at a few examples of using fine tuning to train an LLM to use specific tokens sets to call external tools, be they other models, external services, or function calls for local execution. Fine tuning is training a pre-trained model on additional examples and annotated datasets to orient the model towards preferred generation style without the need of prompting. When using the LLM to dictate tool use the LLM acts as a dynamic router throughout the tasks to hand off sub tasks to external tools. Common labels for tools tend to be neural (wherein we’re calling another deep neural network, converting our LLM into a multi-modal agent), symbolic (wherein we’re calling an external service or function of some type), and knowledge base for when we’re accessing an external knowledge base of facts to inject.

The language model generates tokens step by step, so when a tool’s associated token and following input arguments are generated, the code executing the model detects this output and utilizes the tool. Feedback and results from the tool is then fed back into the LLM as if it was the next generated token, thus adding to the model’s overall context. Further generation of tokens can occur as normal, but now with the augmented in-context memory.

MRKL

MRKL attempts to use fine tuning to replace a few set tokens as methods of calling the desired function. The chosen demonstration of this technique was to use a symbolic tool, a calculator, chosen for LLM’s generally poor performance in math.

MRKL specifically is noteworthy as an early work; it compares this fine tuning approach with a non fine tuned few-shot approach, where the tool is demonstrated in prompt to the agent. Fine tuning and few-shot both worked for simple applications, demonstrating versatility, but as the task became larger or more complex - for example, writing out the word representation of the number versus digits, formatting questions in language versus with operands, more complex algebraic questions, and so on - few shot approaches had a significantly higher likelihood of failure than an equivalent fine tuned model.

Toolformer

Toolformer: Language Models Can Teach Themselves to Use Tools specifically notes that the use of these tools in a fine tuned network requires the network training across a set of examples with a large amounts of human annotations. this process is lengthy, expensive, and difficult to compile for each tool. Similarly, if going beyond fine tuning and outright training on it, you do not want to lose the useful generality of a language model. Toolformer’s approach to solving this is to compose a handful of human-written examples of how the tool API can be utilized, then allow an LLM to annotate existing datasets with possible function calls.

For example; an existing dataset entry of

Out of 1400 participants, 400 passed the test.

…would be expanded to

Out of 1400 participants, 400 (or [Calculator(400 / 1400) → 0.29] 29%) passed the test.

Similarly for an external knowledge base API tool, an entry

The Brown Act is California’s law that requires legislative bodies, like city councils, to hold their meetings open to the public.

…becomes

The Brown Act is California’s law [WikiSearch(“Brown Act”) → The Ralph M. Brown Act is an act of the California State Legislature that guarantees the public’s right to attend and participate in meetings of local legislative bodies.] that requires legislative bodies, like city councils, to hold their meetings open to the public.

Note that the API call is added to the dataset, and not necessarily the returned result.

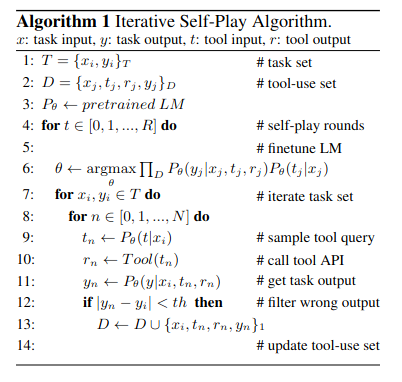

TALM

TALM (Tool Augmented Language Models), an earlier work than Toolformer, takes a slightly different approach. Tools are similarly a set token that triggers the call with arguments, and the results fed back into the context. During training, however, an iterative self-play reinforcement learning technique is used. The training is kicked off with a small dataset of example tool use. For each example within the given set of existing examples, TALM samples each input and calls the tool API, sampling the resulting output to the return results of the tool. If the task output matches the target within a set threshold, the sequence for the tool use is added to the overarching set of examples for the next round of self-play.

This iterative process quickly expands available examples, using the tool itself to directly utilized generate a dataset from which to fine tune a model towards better augmented performance.

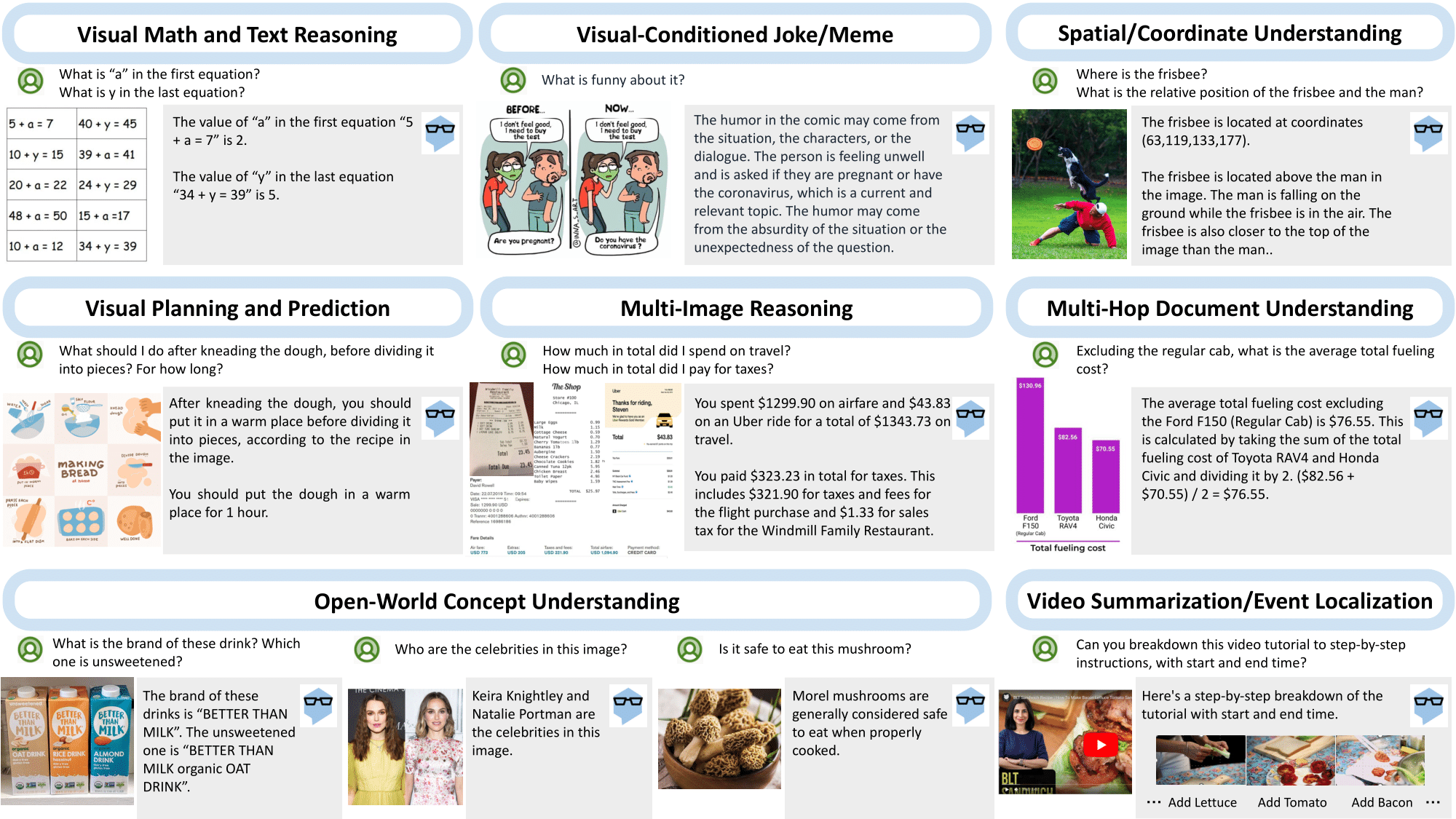

MM-ReAct

The Microsoft Azure AI team created MM-ReAct [paper] [site] [code] [demo] (MM = MultiModal), that plugged ReAct into a number of tool modules that utilized Azure’s AI Cognitive Services. Cognitive Services are a set of APIs exposing pretrained models for common applications and datasets - in this case they leaned heavily on available computer vision models. The team demonstrated that by plugging multiple state of the art deep learning models into LLMs, it can be used as contextual inference engines by utilizing natural language text as function calls. Where ReAct originally demonstrated a great technique to expand complicated reasoning by allowing LLMs to utilize external tools, MM-ReAct takes it a step further by demonstrating LLMs orchestrating multiple tools to create a greater whole - an AI that can reason about image data despite not being able to see.

MM-ReAct lists a set of available image processing tools that can process an image, set of images, or even videos frame by frame. The tools results are translated back to natural language text and fed back into the LLM, which can then choose whether to call yet another external tool or try to answer the question posed to it.

Similar to a set of tools, researches designed MM-ReAct to specifically react to “watch words” which informed or hinted towards what models to utilize. As before, listing the reasoning for its actions often led to better performance during ablation studies.

MM-ReAct, noticeably, also demonstrated that LLMs do have some coordinate understanding; something crucial when we start to explore state management with LLMs. When given image coordinates in the its answers (such as when a question specifies locality, ie the paper’s example “How many championship rings did the player on the left win in his career?”, for instance), the LLM does seem to understand locality generally. Essentially, (1,1) is to the top left of (2,2) assuming traditional upper left corner of an image being (0,0). I should note that the paper unhelpfully says this works sometimes, without going into the specifics. A quick test on my own with GPT-3.5 confirmed reliable answers as long as you specified standard coordinate systems or image coordinates.

Importantly the success of the multi-modal approach in MM-ReAct demonstrates LLMs can act as a context engine utilizing its trained associations to infer next step and unspoken information to tie tools and additional knowledge together to accomplish tasks. Any additional abilities, as long as it can provide a reasonably predictable natural language interface, can immediately be added to models to expand its capabilities without retraining.

MM-ReAct serves as a prime example that tool assisted agents can be greater than the sum of their parts.

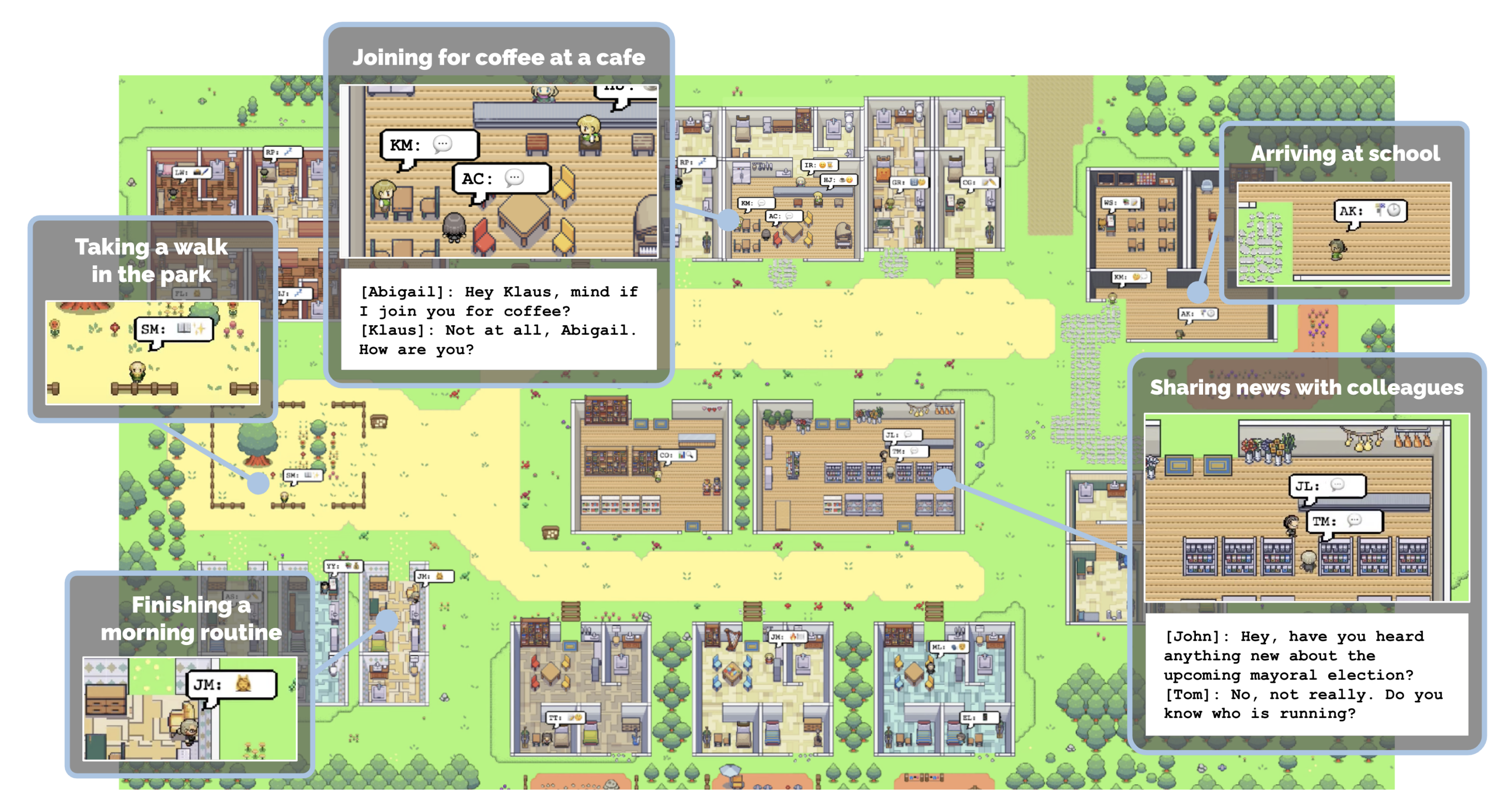

Generative Agents: Interactive Simulacra of Human Behavior

AI, but cute and cozy.

Generative Agents: Interactive Simulacra of Human Behavior [paper] [online demo] [code] is a project that was a news darling for its fun application and splashy demo. Stanford and Google released the project, wherein they created a Stardew Valley-esque world populated with 25 custom agents. The agents had crafted personalities and roughly described routines, but would otherwise be free to interact with the world and each other.

Researchers could inject overarching goals into the agents, such as “throw a Valentine’s Day Party”, and agents would start planning the party, decorating, and inviting other agents to attend. Once hearing about the party, other agents would begin to formulate their own plans to invite others to the party or attend.

I mention this paper not just because it’s cool but rather for four important ideas demonstrated that would be key for possible robotic agents: external state representation, memory management, complexity of planning, and debugging agents in action.

I would also say the idea that agents converse in natural language to be important to; and something I suspect we’ll see in robotic applications as well, but first let’s focus on the premise of LLMs as aiding task planning.

State management

Whether the world is virtual such as our video game world here, or we’re extending LLM’s sense into the real world via an embodied agent, we have to choose a method of representation and management of state. Our goal is to have an accurate and up to date representation of the world we’re operating in.

Here the authors represented state as a tree of environments, converting back and forth between natural language. As agents navigate environments in the world they build these tree representations or update existing ones (agents are not omniscient), so state can be incorrect for unobserved areas). They are told of the room they are in (a label, such as “kitchen”), objects within (“stove”), and a descriptor/state for that those objects. Areas branch down, ie you may have a kitchen and a bedroom within your house - both branched from the house environment. Objects have a state defined ot them based on the LLM’s interaction. A stove can be off, or a faucet can be leaking. The LLM is directly controlling the state here, rather than observed or prepared state through the application.

This does simplify matters - the simulation didn’t actually have to program unique objects built with special interactions. It does mean that applying this state model to other applications is going to require better integration with methods to interact with the environment. We’ll look into other approaches for that later.

At each step of deciding an action the state is presented by informing the agent where the agent is, from parent environment to room (ie “you are in your house in your kitchen”) and then presenting the list of objects within and their state. Then it lists the known first tier areas known (library, cafe, John’s house, etc).

Memory stream

To extend agents' memories, the authors created what they called a memory stream. The memory stream is a set of memoy objects that consist of a natural language description, a timestamp, and an access recency timestamp. The natural language component is called the observation and is generated by the LLM as a description of an action or description of an observed state.

Observations are the actions observed throughout the agent’s perception and done by itself; for example:

- “Isabella Rodriguez is setting out pastries”

- “Isabella Rodriguez and Maria Lopez are conversing about a Valentine’s day party at Hobbs Cafe”

- “The refrigerator is empty”.

Observations are further synthesized recursively into higher level abstract thoughts generated by the agent in regards to its goal and observations. These are called reflections. Reflections would be saved as part of their external memory stream and injected into the agent’s context when deciding actions.

To generate the reflections, researchers load the 100 most recent memories generated and then asks it “What are the 3 most salient high-level questions we can answer about the subjects in these statements?" An example pulled from the paper:

Given a set of memories for an agent simulacra such as:

- Klaus Mueller is reading a book on gentrification

- Klaus Mueller is conversing with librarian Maria Lopez about his research project

- The desk at the library is currently unoccupied

…generated questions can be like “What topic is Klaus Mueller passionate in?". Once we have our questions, each one would be used to query all relevant memories (and other reflections) for retrieval. The LLM is prompted to extract insights while citing relevant records that served as evidence for the insights. These insights are then stored in memory as a reflection.

For the above example, the paper demonstrates:

Statements about Klaus Mueller:

1. Klaus Mueller is writing a research apper

2. Klaus Mueller enjoys reading a book

3. Klaus Mueller is conversing with Ayesha Khan about exercising...

...

What 5 high-level insights can you infer from the above statements?

(example format: insight (because of 1,5, 3))

…and example generated reflection is “Klaus Mueller is dedicated to his research”. The generated reflection is a leaf on the tree of memories and other reflections they are derived from, creating increasingly higher abstractions of thought

While this generates a richer tapestry for our simulacra, some of you have already seen a key problem in the presented example; the reflection doesn’t have any grounding in established reality. This works for this fictional application but does present some issues to work out if it was to be applied to grounded environments.

Querying memories is handled by balancing three scores; recency, importance, and relevance. Recency is calculated by the recorded occurrence of the memory scaled across all memories in the (0,1) range with an exponential decay function with a 0.99 decay.

Importance is recorded at the moment of saving the memory. Memories being written are rated by an LLM on a scale of 1-10 how important the memory is, with 1 being purely mundane and 10 being extremely poignant.

Relevance is calculated as one would typically handle search amongst vectors. When memories, observations, and reflections are saved to the memory stream, a language model generates embedding vectors for the text component. This vector is used to calculate proximity to the current query text using cosine similarity.

Finally these three scores are normalized and added to create a vector of memories when querying, with optional (unused in this application) weighting constants for each score.

Planning

Agents here can create long term complex multi-step plans given abstract goals. We’ve already established how agents experience the world, remember relevant information, and create higher abstractions of these memories for further self definition. How do we combine these entities into long-horizon planning, where what the agent wishes to do requires many steps of intermediate planning to accomplish.

The paper creates plans as set objects generated by the LLM and recalled in combination to aforementioned memories and reflections. Unlike the memories, plans can be modified throughout their execution as new information arises. A top-down approach is made to generate plans. First create a plan that outlines the day’s agenda broadly. Agents are prompted with their summary description to insert traits, name, and recent experiences, and prior day summarized. An example from the paper:

Name: Eddy Lin (age: 19)

Innate traits: friendly, outgoing, hospitable

Eddy Lin is a student at Oak Hill College studying

music theory and composition. He loves to explore

different musical styles and is always looking for

ways to expand his knowledge. Eddy Lin is working

on a composition project for his college class. He

is taking classes to learn more about music theory.

Eddy Lin is excited about the new composition he

is working on but he wants to dedicate more hours

in the day to work on it in the coming days

On Tuesday February 12, Eddy 1) woke up and

completed the morning routine at 7:00 am, [. . . ]

6) got ready to sleep around 10 pm.

Today is Wednesday February 13. Here is Eddy’s

plan today in broad strokes: 1)

The LLM generates a rough sketch of the agent’s day. An example response for above:

1) wake up and complete the morning

routine at 8:00 am

2) go to Oak Hill College to take classes starting 10:00 am

[. . . ]

5) work on his new music composition from 1:00 pm to 5:00 pm

6) have dinner at 5:30 pm

7) finish school assignments and go to bed by 11:00 pm.

This rough sketch is then divided and recursively decomposed into hour long chunks, and then again into 5-15 minute chunks. Each division is composed similarly, asking for more detail to break down the plan into manageable chunks.

Agents are then asked, during their action loop, based on perception of the world state, memories, and existing plans, whether the agent should continue with their existing plan or react to events and update the plan. This is bolstered by observations being paired with information on the relative relationship to the agent and the status of the entity.

Unfortunately this doesn’t perfectly translate to grounded environments, as the agents in this world can simply “play pretend” when they do an action, and the world assumes that they simply succeeded. It does begin to paint a picture of utilizing iterative loops of planning and reflection.

Debugging

Since LLMs are black boxes it can be difficult to ascertain the why of certain actions. With a complex set of memories, reflections, and plans to sort through, determining expected behavior or understanding agents actions becomes a chaotic mess. Enter the most clever of debugging strategies; just talk to the agent.

The authors created a set of tools to allow researchers to pause the simulation and interview any of the agents about their plans, what they’ll do next, and what they’re thinking given their available information. Yes, LLMs are not deterministic (assuming temperature configured as such, which most of these papers do), but it does allow some use as a natural language debugging of the otherwise complex system.

Socratic Models

Released approximately six months prior to ReAct, Socratic Models [paper] [site] [code] produced a self-questioning multi-modal agent to combine LLMs and vision models for impressive zero-shot results. Not only does is the method competitive with state-of-the-art image and video content captioning; Socratic Models impressively show contextual egocentric understanding of videos, to the point that questions about long form video footage (“Why did I go to the front porch today?") can be answered, allowing interesting understanding of intent.

Questions all the way down

Socratic Model’s prompting approach is to iteratively call the model to ask questions. As the questions evoke more data, additional data can be combed from external models or sources, and future evocations narrow response to a more refined answer. Given an image or video of set frames, the model would ask itself questions such as:

- Where am I? - this utilizes a vision language model (VLM) to rank Places365 scene categories of possible candidates, returning the top

ncandidates in inserted the prompt asPlaces: [place1], [place2], [place3] - What do I see? - for object and person recognition, the VLM uses OpenImages object categories against the image with the top

ncategories of the 600 possible inserted in the prompt asObjects: [object1], [object2], [object3] - What am I doing? - for activity recognition a back-and-forth interaction between the language model (LM) and VLM, having the LM infer most likely activities to what’s occurring. The LM suggests things that aren’t in existing datasets, allowing unique situations to still be suggested based on situational context.

Let’s expand on that third bullet point. The authors note that activity datasets for computer vision is typically third person focused, so egocentric models are rare. To achieve this feat, the models ask repeated questions. An example from the paper (note that the [activity1] line is cut if this is the first pass):

If I am making pancakes, objects that I am likely to see include:

a frying pan, a spatula, a bowl, milk, eggs, flour, sugar,

baking powder, butter, a plate, syrup

These items are fed into a VLM trained with the Places365 dataset to see if new possible locations, activities, and objects are noticed given the hints. Once this loops produces no new entries during the loop, a final prompt completion is utilized to summarize the egocentric activity:

I am in a [place1], [place2], [place3]. I see a [object1], [object2],

[object3]. I am [activity1].

Question: What am I doing? Answer: I am most likely

To further incorporate context from videos authors used Wav2CLIP to describe 5 second audio chunks to the LLM for additional activity summarization. Hearing footsteps, music, or conversation can vastly affect predicted activities and experiential history.

The results

This constant questioning over a set experience builds a sort of world state history over time within the LM’s context window. Since videos can consist of so many frames, even chunking by time (such as 5 second chunks) results in an overflow of summaries surpassing available model context memory. To combat this, LMs are used to routinely summarize progress so far. For particularly long subsets of video (for example, a day-long first person recording), video search and summarization search is used to isolate key moments relevant to the question posed. Example questions from the paper are “Did I drink coffee today?" or “Why was my wife laughing today?". Note that the LM summary is more useful than direct video search sometimes, as the video frames associated with the action may work for some questions (ie presence of a coffee mug answering the first question) but may miss the key moment to answer other questions, such as for our laughing wife example, video of a woman laughing misses the actual reason for the laughter.

Socratic models can answer complex questions and summarize long form egocentric video recordings of a day, but authors do note that a common failure mode is over-counting (ie you drank a singular coffee for several minutes, which the model may count as multiple separate coffees). Noticeably the models can answer forecasted questions as well, though predictions of what an individual would do given the experiential history is highly tied to the model’s biases. Or, in other words - if you live an average routine, the model is more accurate than someone with a more unique schedule.

Applications

The authors discuss applying the models across many domains, including robotics. In this case, a simulator of a robotic arm renders an overhead camera capturing the arm and table workspace. The table has a few blocks and bowls; the model was able to do long term task planning by prompting the LLM to prepare psuedocode based on scene understanding. Robotic control was broken down to a set of simplified instructions (robot.pick_and_place('yellow block', 'green bowl')) but doesn’t go into any more complicated control than this. It is also worth noting that were some issues with existing vision models correctly working with the simulated blocks, given the unrealistic look of the simulated environment.

LLMs and Robotics

Now that we’ve explored prompting and call strategies for task decomposition and self reflection, and explored several agents demonstrating levels of state understanding and multi-modal uses let’s look at papers that specifically look at methods and applications of LLMs for use in robotics.

We’re going to be looking at the following papers:

Again, this list isn’t comprehensive - it’s some of the better papers I read or that I felt covered must-know techniques. There are many papers of varying quality exploring the space, and I suspect there will be many more in short order.

Code generation and multi agents

A note before we dive in. A number of these papers suggest high level code generation for a form of task planning; some even doing recursive generation of increasingly complex code. That would imply there would be heavy overlap in some of the headline catching projects that are promising to replace programmers, producing entire applications and products in a matter of minutes.

After looking into it, I decided I want to do a write up on some of these entries, most of which have no real research papers associated with them, just open source projects. This is a deep rabbit hole on its own, and I’ve elected to skip them for now. The primary driver of this decision is that, without associated benchmarking paper, I’ll have to actually try each of these in order to comment on actual effectiveness of their techniques.

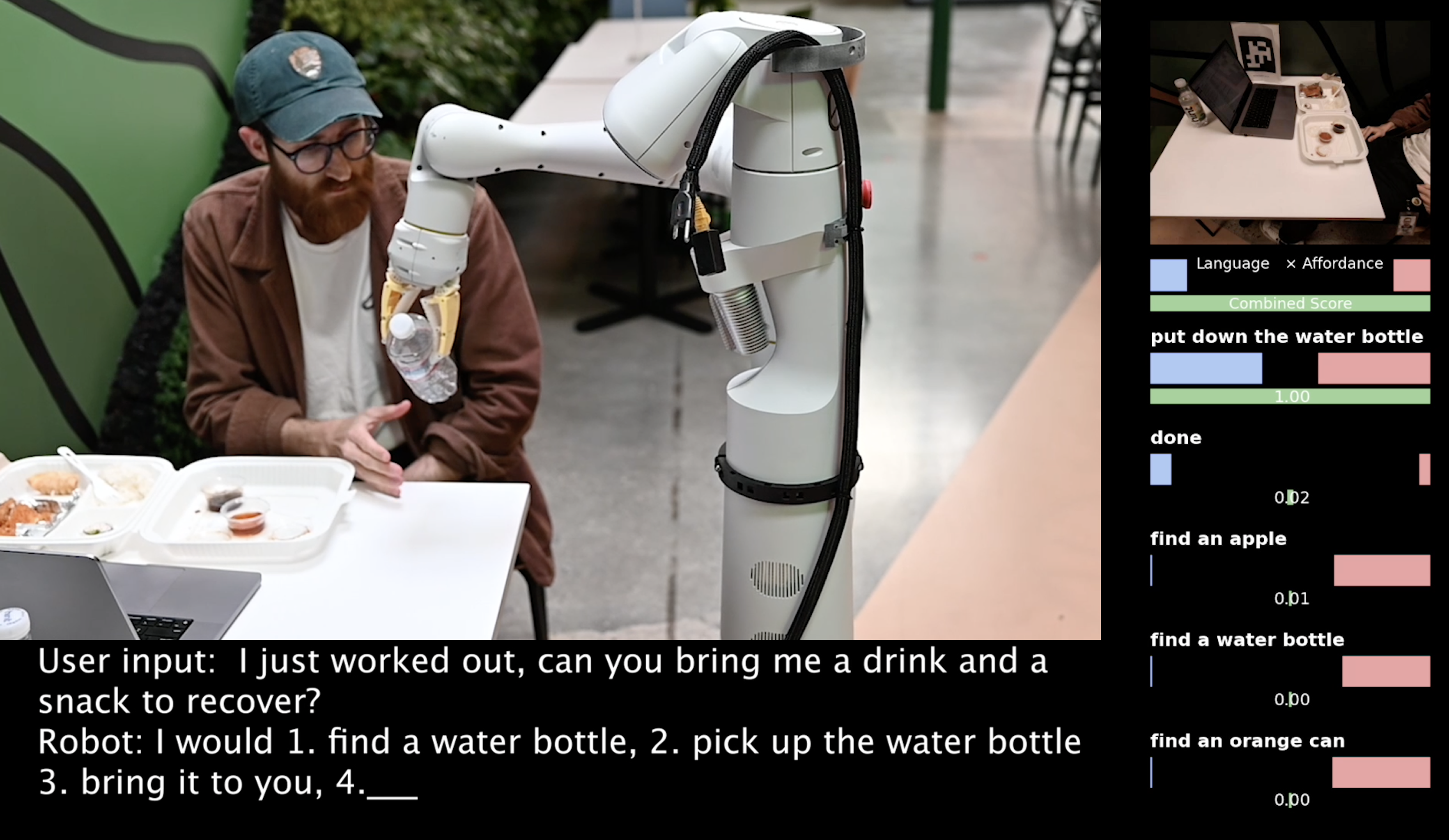

SayCan

SayCan [paper] [site] [code] [demo] is easily the most referenced paper of the group, as it was an early (April of 2022, practically ancient for this topic) work from Google’s Robotics at Google and the unfortunately now defunct Everyday Robotics (it wasn’t a part of ads, so it died at the whim of an executive, a story as old as time).

SayCan’s primarily focus was to address grounding LLM responses to a set response. An ungrounded solution an LLM could suggest is one that simply can’t be carried out due to the capabilities of our embodied agent (the robot) or the state of the world. For example; if we tell an LLM that we have spilled a drink, it may suggest we get a mop to clean the spill. A valid idea, if it weren’t for the lack of a mop in the immediate environment. Similarly, imagine an LLM telling a robot to fly to a location when it is a wheeled robot; not an ideal result.

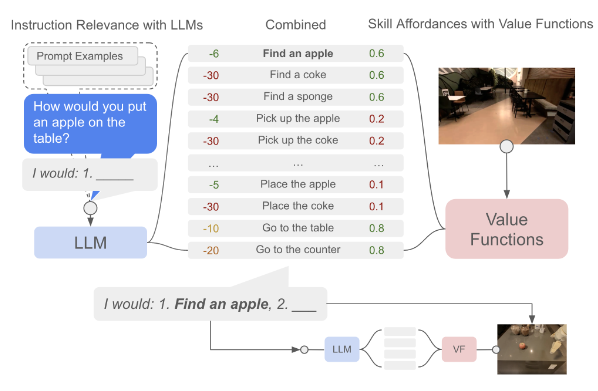

SayCan’s solution is to train a new network that combines a frozen language model to produce embeddings to describe a recommended action for the robot in the form of text, combine that input with the current state of the robot and its vision sensor, and determine the viability of that action working. To do this, the authors created affordance functions (representations of how likely the robot can accomplish the suggested task).

Affordance Functions

To train these affordance functions, SayCan utilizes temporal difference (TD) based methods to define a Markov decision process (MDP) to train a value function to estimate value of a given suggested action. Since they utilized a sparse reward case (1.0 on successful completion of a task, 0.0 on failure), the value function becomes our affordance function.

Author’s note - if these reinforcement learning terms sounds interesting but also like a bit of gibberish, I have some recommended reading for you. Reinforcement Learning 2nd Edition is an excellent free textbook that covers the topic extensively. If you want a less dry, less mathematically intensive introduction I also can recommend Grokking Deep Reinforcement Learning.

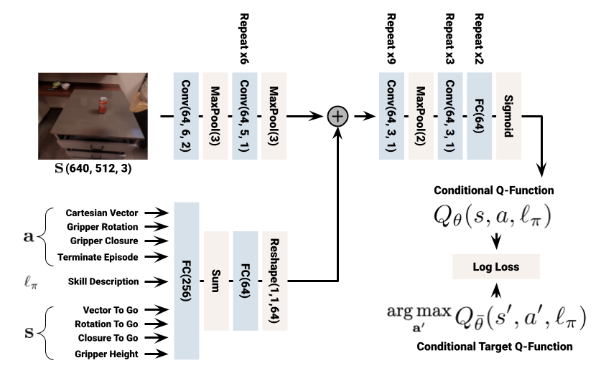

The value function model consists of a frozen language model to convert the instructions into a set of embeddings, which in turn feeds that result into a neural network with the available image and robot state. This is propagated through a network to infer the value evaluation.

Note that there were multiple value functions, each for a set skill. Grasping (“pick up a can") is a different skill than find, which is a different skill then open drawer, and so on. When SayCan attempted to find the affordance of a given task it would use the correctly associated function to the skill. This approach was used such that SayCan robots could have their capabilities expanded with new skills by simply introducing the LLM to it via prompt examples, and then providing a trained affordance producing value function.

SayCan in action

SayCan powered robots follow this process when operating:

- Receive a human command in natural language (via voice or text)

- Use a few-shot approach to prompt the LLM into suggesting a course of action for the given command. Do this multiple times to produce many possible courses of action. (Say)

- For each generated suggested action, feed the current state of the robot, vision frame, and text into the value function network. This creates our affordance score. (Can)

- Select the action with a combined highest affordance value and relevance to the requested task and attempting executing it.

- Throughout the task, determine if the course of action needs to be adjusted based on new information, failures, or outside influences.

Language models

The authors trained multiple SayCan models in order to test through ablation the performance based on several factors of the model. They used the PaLM and FLAN models; specifically the 8B (billion), 62B, and 540B parameter PaLM models and the 137B FLAN models. Despite FLAN being fine tuned for instruction, PaLM seemed to outperform it. The authors suggested this was due to the broader dataset. Model size also seemed to suggest better performance, but it’s unclear how much it causes effects in performance. These models are used with few-shot prompting and chain of thought to generated the suggested actions to move the robot towards solving the human request.

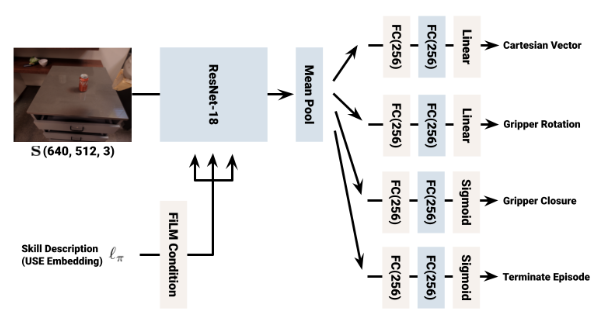

Skills

Skills were treated as stand alone functions that operated based on a language input and the state of the robot. Skills were trained with either behavioral cloning via BC-Z [paper] [site] or reinforcement learning with MT-OPT [paper] [site].

Behavioral cloned skills were created using a dataset of 68,000 demonstrated skills. The collection of the dataset took 11 months on 10 robots; operators utilized VR goggles and controllers to control either the real robots or a simulated environment, performing the task as instructed.

The BC-Z architecture was modified from its original design to also support the generated task embeddings as input, and the ending fully connected layers adapted to the designated robot.

The MT-OPT architecture matched the original paper save the addition of the generated embeddings as well. To “smooth” operation, an asynchronous approach towards inference and action was utilized, wherein the inference for the next action’s move would be performed while still completing the prior task. Thus an additional input was concatenated to the current robot state; the percentage completed of the prior task.

Both BC-Z and MT-OPT approaches utilized RetinaGAN [paper] [site]; a GAN specifically for converting the unrealistic simulator views to more realistic imagery. This has been show to drastically improve performance in moving networks trained in simulators to real robots (aka sim-to-real transfer).

The BC-Z trained skills did work, but was routinely outperformed by MT-OPT trained skills.

Note that both of these approaches were very costly to produce and train. There was a massive time and labor upfront cost to produce the behavioral cloning dataset. The MT-OPT model took for 100 hours of TPU training time along with a pool of 3,000 worker nodes to collect episode data, and another 3,000 worker nodes to calculate target Q-values. It is unlikely we’ll see similar approaches taken by smaller labs or individual DIY roboticists like myself.

Inner Monologues

Inner Monologues [paper] [site] proposes an approach of embodied reasoning planning, specifically using a frozen LLM model with incorporated environmental feedback to allow better control in robotic environments. The hope is to figure out an approach on how to augment LLMs to handle control of robotic agents in complex tasks without fine tuning or significant changes requiring retraining.

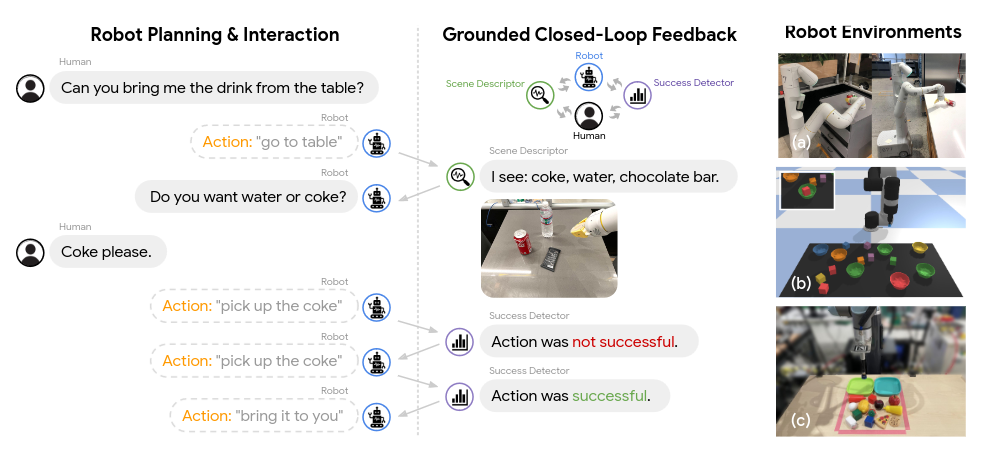

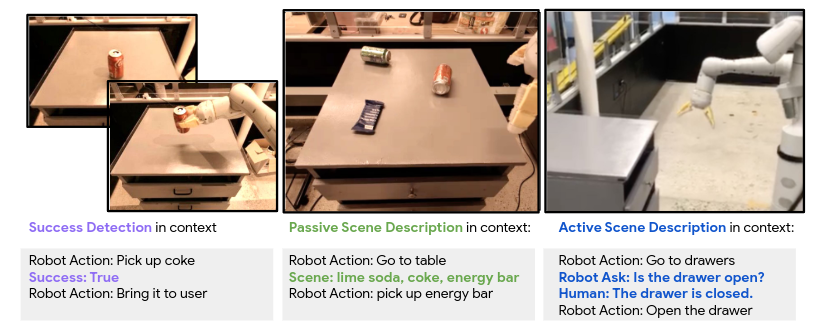

Feedback is categorized as three types; success detection, passive scene description, and active scene description. Success detection is self explanatory - letting the LLM know if a chosen action was successful or not, defined by whatever the external tool or function requires. Passive and active scene description both describe what’s occurring within the scene (using a Vision Language Model (VLM), Vision Question Answering (VQA) model, or just simple object detectors to convert image data to text descriptions). Where they differ is passive scene detection encompasses constant feedback that ground the LLM in a scene, while active scene description encompasses all feedback provided in response to an LLM query. An example - a scene description of a desk with objects is passive. If the LLM asks for additional information about the items on the table, or the state of an object in memory, active feedback is returned often in the form of an unstructured response to the query.

The collected feedback is injected into the context window of the LLM, allowing it generate a set of actionable steps in response to human instruction. These actionable steps are then given to external modules to execute, which in this paper was trained either using reinforcement learning or behavioral cloning. The LLM is primed to approach each domain through constructed few shot prompts. Similar to ReAct, the examples prompt the model to follow the pattern of Robot Thought: and Robot Action:, injected with resulting Scene: descriptions.

Authors utilized real and simulated robotic arms and platforms for several manipulation tasks and long-horizon manipulation/navigation tasks. Even with adversarial disturbances (as is tradition in cutting edge robotics, defined as an intern with a stick screwing up the robot) the approach proved resilient and could replan when appropriate.

The model was able to communicate with humans for direct input, or interrupted changing of tasks, and demonstrated the ability to understand typically ambiguous command phrasing (“Ignore this task and do the previous task”). When blocked by an abstract obstacle, the model showed the ability to create alternative plans. An example from the paper was a block to be utilized to solve a task was made intentionally too heavy for the robotic arm, unbeknownst to the model’s context; repeated failures caused the model to suggest “finding a lighter block”. Finally, as a benefit of LLM’s wide net approach for training data, the model also demonstrated multilingual support when interacting with humans, even demonstrating the translation of emojis to instructed action.

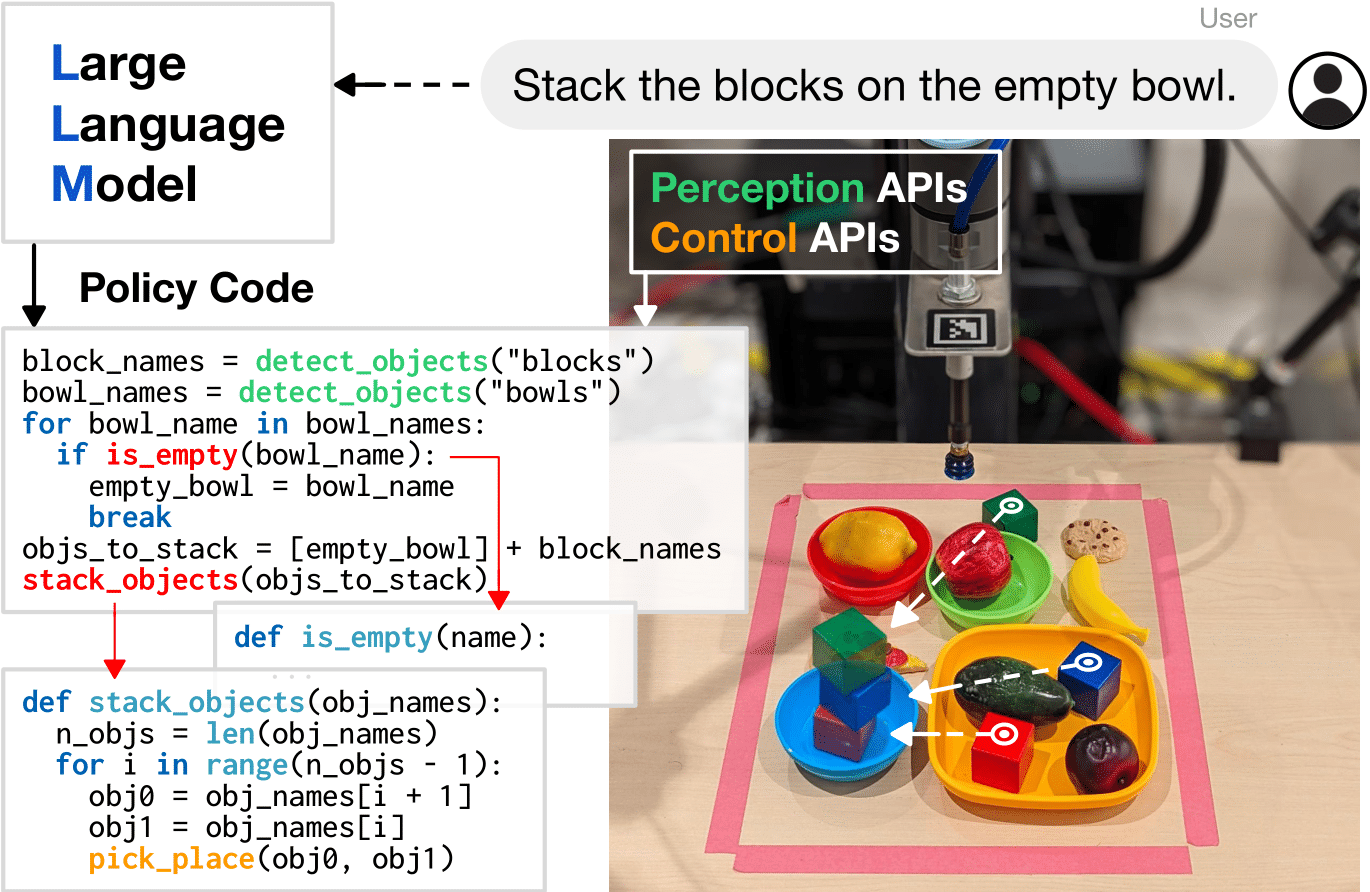

Code As Policies

Code as Policies [paper] [site] [code] (CaP) had authors note that LLMs have particular skill at code generation, and demonstrated some temporal and geospatial reasoning. Could a framework be created to allow them to generate policy code for robotic control?

Yes. The answer was yes.

The authors define LMPs (Language Model generated Programs) as any set of code generators powered by an LLM and executed. CaP utilizes different LMP agents to create its policy code for robotics control. User input (ie stack the blocks in the empty bowl) is added as Python comments. CaP uses few-shot techniques, providing a few key examples, to then generate code. If a complex action is required or expected by the LLM, examples provided lead the LLM to reference functions with generic, predictable names.

Before executing the code, the code is parsed into a syntax tree. If undefined functions are detected, an LMP oriented towards function creation is called to generate more code to define and create the expected behavior for it. In short - the first LMP can generate a function name such as get_abs_diff_between_means, which is then on the next iteration populated with code to get means and find the absolute value of the difference between them. If these functions similarly define a yet-to-be-defined function, then the next iteration will define it, with existing functions staying in the LLM’s contextual scope.

LMPs can also utilize third party and first party libraries if meaningful examples are provided, so it’s easy to slot in domain specific wrapper code for adapting to more complex applications or porting to new robotic platforms.

The paper is light on actual programming details, but the GitHub repository contains several full example prompts utilized in the paper, so check there for examples.

Domain specific prompt engineering and setup is required, CaP acting as more of a guiding framework than any specific implementation. It assumes that the robot has some existing system to take note of the surrounding environment through some suite of sensors and/or perception models and convert its findings to a text based state.

Where Code as Policies suffers is from long horizon planning, temporal reasoning, and difficult spatial reasoning. Hallucinations still do occur at times, though the authors applied Chain of Thoughts and other techniques to reduce this.

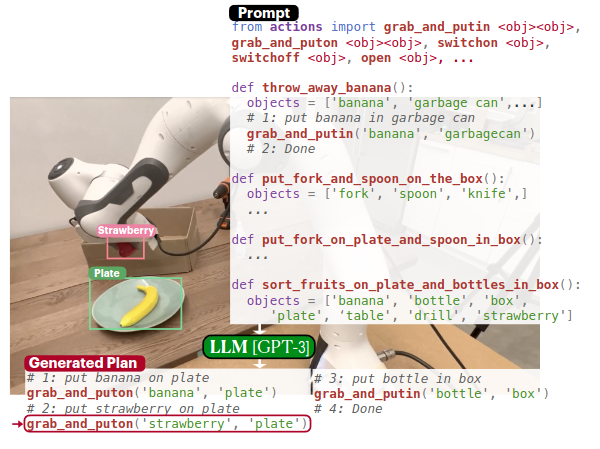

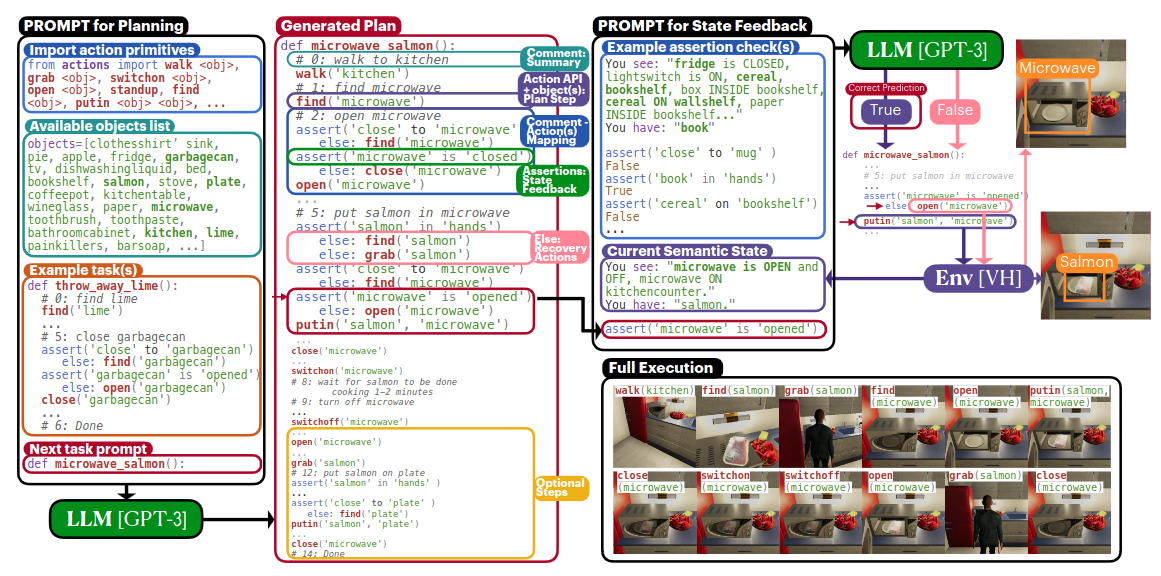

ProgPrompt

ProgPrompt [paper] [site] [code] discussed a programmatic prompt approach to facilitate plan generation across different environments and robotic applications. ProgPrompt is similar to Code as Policies in its generation of Python code for robotic control through few shot examples.

ProgPrompt tries to generate a long term plan off of human input in the form of code, breaking down commands like “put the salmon in the microwave” to create API calls like find(salmon). Comments in the code provide natural language summaries of sequences of actions in the form of procedural code. First the task is broken down into sub tasks in natural language (our previous example becoming “find salmon” “grab salmon” and “put salmon in microwave” as logical steps). assert is utilized in code generation to determine success of a particular action, acting as environmental feedback during execution. An example of our generated salmon example code:

def put_salmon_in_microwave():

# 1: grab salmon

assert('close' to 'salmon')

else: find('salmon')

grab('salmon')

# 2: put salmon in microwave

assert('salmon' in 'hands' )

else: find('salmon')

else: grab('salmon')

assert('close' to 'microwave' )

else: find('microwave')

assert('microwave' is 'opened')

else: open('microwave')

putin('salmon', 'microwave')

State is injected into the prompt during code generation by inserting the available objects in the environment as a list of strings. The prompting scheme similarly lists with few shot examples the set of available functions for the robotic platform.

Researchers utilized ablation studies in ProgPrompt to identify crucial components - specifically, both the feedback and comment generation dramatically improved performance across their chosen benchmarks. The assert usage was unfortunately based on the simulator’s omniscience of the environment; the feedback mechanic was not utilized in their real world examples due to the lack of a reliable tracking system for environment state. State tracking is clearly important for LLM integration to robotics, and ProgPrompt focuses more on programmatic generation of planing than full integration solutions.

Statler

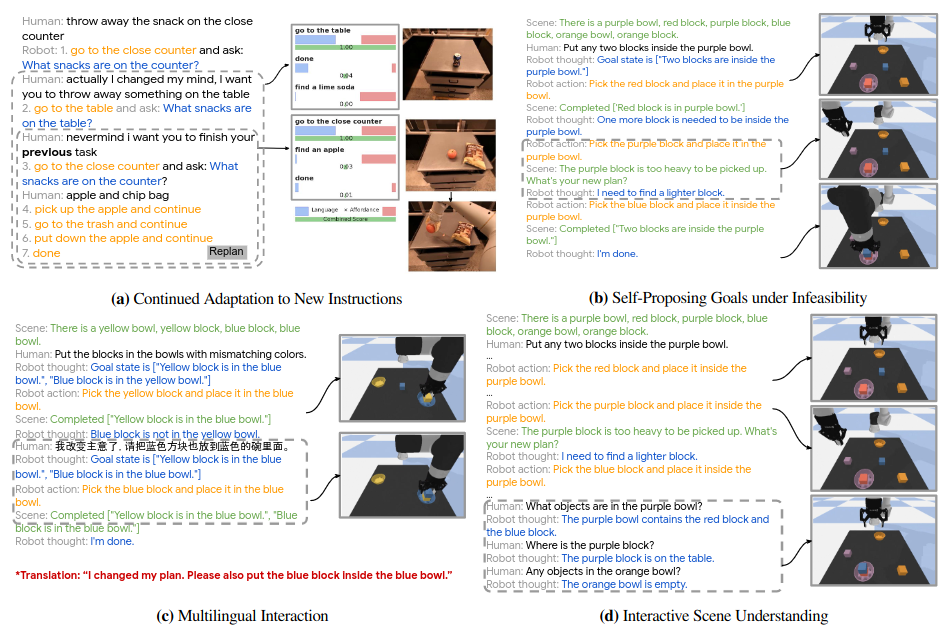

Statler [paper] [site] [code] (STATe-maintaing Language models for Embodied Reasoning; yes it’s a stretch of an acronym) utilizes LLMs for embodied reasoning for state management. Succinctly, Statler proposes a method to create a representation of the world in text that acts as an injectable memory and maintained over time entirely through LLM interaction, allowing long horizon task planning for robotic agents.

Statler creates a world-model reader and a world-model writer agent for state management. The world-model reader interfaces with the world model (state within a datastore), and based on questions posed to it by a task planner returns a set of answers. The world-model writer is responsible for predicting the resulting world state given the current world state and a query given by the reader.

For a simple explanation, imagine this task - an LLM controlled game of shells/cups/three card monte (its name is apparently different throughout the world). You have three cups, one covering a trinket we wish to track.

cups = [False, True, False]

If we were to ask a vanilla LLM to swap a set of cups a few times, we have a high rate of failure, where it can’t track what would have changed with each action, resulting in unknown indeterminate states. As the actions become more complex or numerous, the rate of failure would raise.

cups = [False, True, False]

Swapping cup 1 with cup 2

Swapping cup 0 with cup 2

Swapping cup 1 with cup 2

cups = [False, False, True]

Note the above was generated with an LLM and is incorrect; the first cup should be where the trinket lies.

We’ve seen how forcing LLMs to generate reasoning as it generates answers results in better performance due to the reasoning being present in context during generation of the tokens representing our answer. The same is true if the desire state is tracked and injected into the LLM during the operation. The same example again, with modified state injected:

cups = [False, True, False]

Swapping cup 1 with cup 2

cups = [False, False, True]

Swapping cup 0 with cup 2

cups = [True, False, False]

Swapping cup 1 with cup 2

cups = [True, False, False]

An LLM generated the above, and sure enough - we see the state is correct. Statler plays off of this idea for several complex environments. I must note though that the authors admit that a custom implementation of the world design - how we approach representing the state and how actions modify it - must be defined for each unique application, so a domain specific approach is necessary.

Authors of Statler propose utilizing a few-shot approach for each set of expected actions. In the paper’s robotic example, it utilized an LLM controlled robotic arm with egocentric video moving colored blocks into colored bowls. Blocks could also be “infected” or “disinfected” by touching other “dirty” blocks or being placed into the “disinfector” bowl respectively. Thus an example use of the world-model writer:

state = {

"objects": ["cyan block", "yellow block", "brown block", "purple block",

"blue block", "green bowl", "red bowl", "disinfector"],

"relations": [],

"disinfector": {"contains": []},

"cyan block": {"is": ["dirty"]},

"yellow block": {"is": ["clean"]},

"brown block": {"is": ["clean"]},

"purple block": {"is": ["dirty"]},

"blue block": {"is": ["clean"]},

"green bowl": {},

"red bowl": {}

}

query: Put the cyan block on the yellow block.

…our world-model reader would respond to the query:

put_first_on_second("cyan block", "yellow block")

update_wm("Put the cyan block on the yellow block")

…whereas our world-model writer would adjust the state given the query, creating:

state = {

"objects": ["cyan block", "yellow block", "brown block", "purple block",

"blue block", "green bowl", "red bowl", "disinfector"],

"relations": [["cyan block is on yellow block"]],

"disinfector": {"contains": []},

"cyan block": {"is": ["dirty"]},

"yellow block": {"is": ["dirty"]},

"brown block": {"is": ["clean"]},

"purple block": {"is": ["dirty"]},

"blue block": {"is": ["clean"]},

"green bowl": {},

"red bowl": {},

}

If we had executed multiple actions as our generated code from the world-model reader, then we would have multiple updates, executing the expected state change for each update. Similarly, we can control and inject few-shot examples based on the action being taken for the state.

The authors also demonstrated additional reasoning through the state. shown when given additional meta information about the blocks, such as memorizing and reasoning over relative block weights (“the red block is twice the weight of the bronze block”) and used to try abstract problem solving such as “put the blocks in the purple bowl so that their total weight becomes identical to what is in the gray bowl”.

Authors compared performance to Code-as-Policies, even suggesting it as an extension of Code-as-Policies directly. They noted better performance in multi-step task plans and temporal reasoning tasks.

Statler had two notable fail states; the first was hallucinations, a common issue we’re seeing throughout applications. The second was ambiguity in terminology on requests. Terms like “other” when referring to objects relative to one another would have a higher probability of failure.

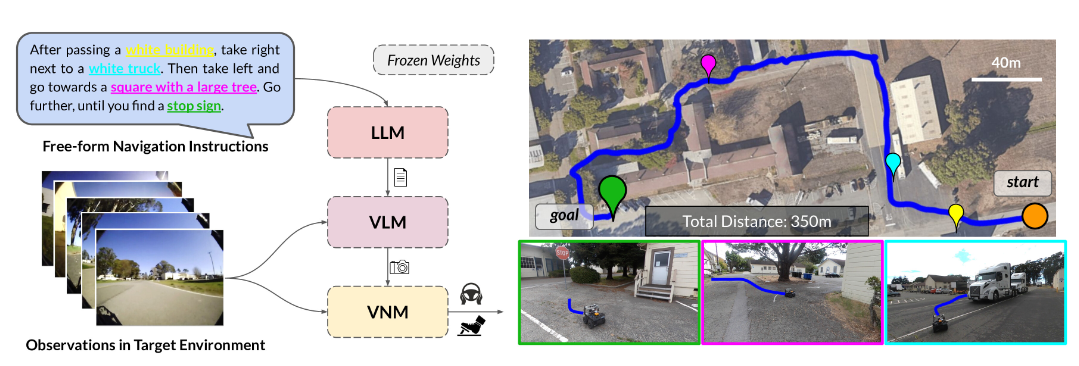

LM-Nav

LM-Nav [paper] [site] [video] sought to combine pre-trained models to create a “larger than the sum of its parts” solution for robot navigation. Researchers combined off the shelf LLMs (GPT3.5) with existing vision language models (VLM) and existing visual navigation models (VNM). The goal was to allow human language as an interface for navigational guidance for a robot. Or, in other words, convert “take a left at the fire hydrant” into actionable robotic navigation.

We’ve discussed VLM’s already within this work; they are models that combine language labels with images, allowing the model to create descriptions or answer queries about the content. The authors used CLIP [paper] [site] [code] (Contrastive Language-Image Pre-Training) from OpenAI to act as their VLM for LM-Nav. CLIP converts images into an embedding space that allows it to determine how likely a string is to be associated with a given image.

The LLM is asked via few-shot prompting what the key landmarks, in order, based on the provided human guidance. Thus:

Go towards a park bench, take a left at the stop sign. Go towards a blue dumpster, take a left and stop at the blue truck.

…has the following isolated landmarks as a response.

1. Picnic bench

2. Stop sign

3. Blue dumpster

4. Blue truck

We then have the VNM; these models allow robots to navigate in environments using only camera input to generate a sequence of actons to move the robot from its current position to a goal pose. The authors used the ViNG [paper] [site] model to tell us temporal distances between pairs of images in corresponding actions to execution. It’s used here to serve two purposes:

- Given a set of our robot’s observations, create distance predictions to create a topological graph to create a “mental map” of the environment.

- Given a sequence of connected sub-goals to the goal node (such as our landmarks), navigate the robot along that path.

All together - the LLM converts our human instructions to a set of landmarks, paired with the VLM to find associated images with the parsed landmarks. Finally, the VNM decides how to effectively move between these waypoints.

The outcome of LM-Nav was a robot that, given rough directions from a human like a local at the gas station before smartphones, can reach a designated goal. This was also notable for accomplishing this without having humans annotate data or retraining any model, just using off the shelf models for an easier integrated multi-modal approach.

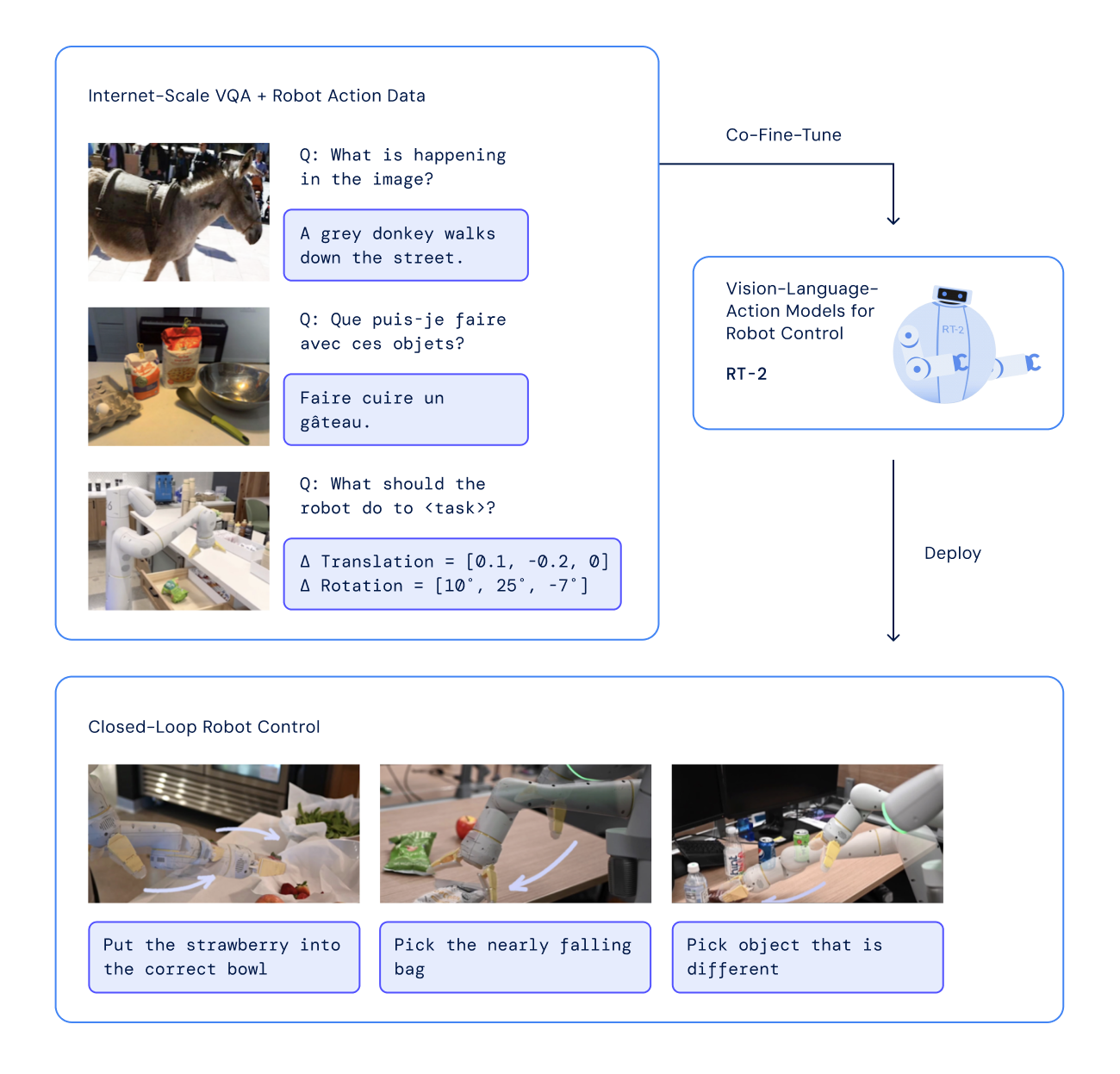

RT2

RT2 [paper] [site] was a followup paper improving on RT1 [paper] [site] from (once again) defunct Everyday Robotics. Standing for Robotic Transformers, RT2 explored using transformers to create a Vision Language Action (VLA) model. RT2 will, given natural language commands, robotic state, and vision, have a singular combined model output direct robotic control. While other approaches we look at address mainly higher level planning for robotic tasks, RT2 provides an end to end sensoriomotor control solution.

Building a VLA

Researchers built off of the existing vision language models (VLM) - PaLI-X and PaLM-E [paper] [site]. Both models are trained across web-scale datasets to convert input image scenes to text descriptions. For PaLI-X researchers built off of the 5B and 55B parameter models, and for PaLM-E a 12B version was created.

The researchers collected a set of robot demonstration data over 17 months with 13 robots in an office kitchen environment; the same room and robots utilized for the SayCan paper. In fact, SayCan’s 68,000 demonstrations were added to this dataset. Each demonstration consists of a natural language instruction annotating a demonstration of a skill (pick, open, place into, etc) and one or more nouns for the objects manipulated (7up can, drawer, napkin, etc). All hyperparameters and training regularizations and schedules are adopted from the original VLMs papers.

The action space (the set of possible outputs needed to express a state of movement in the robot) consists) of the 6 degree of freedom arm, plus positional and rotational displacements of the end effector, gripper extension, and a discrete command for episode termination (to signal successful completion of the task). Discretizing the space (as opposed to a continuous output which I discuss in my PPO robotic arm project) gives us 256 uniform bins. Researchers associated each bin with existing tokenizations. For PaLI-X, integers up to 1000 each have unique tokens, so they associated the action bins to its corresponding integer. For PaLM-E model, researchers overwrote the 256 least used tokens to create an “action vocabulary”.

The model is then fine tuned across the robot dataset and the original VLM dataset consisting of question and answers about labeled images. When generating output for the original dataset, the VLA acts as a normal VLM. For the robotic actions, researchers constrained output to just the 256 expected action tokens for execution on a real or simulated robot. Over time, the ratio of robot data to original web data is skewed more towards robotic data.

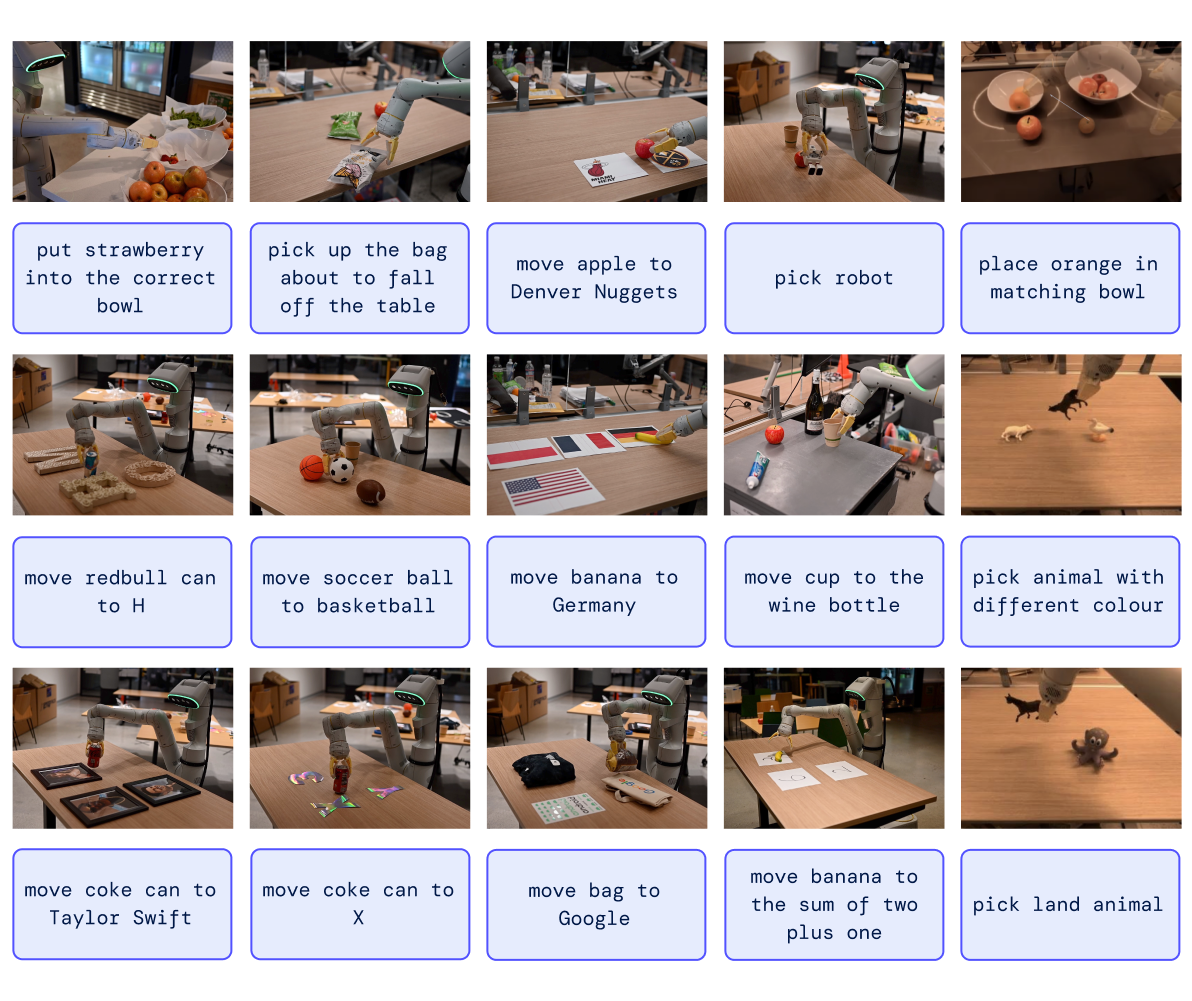

Results

The associated project website provides several videos of RT2 powered robots in action.

RT2 demonstrated several impressive capabilities. Powered by a singular neural network, robots could receive a human’s command in natural language, its vision, and current state, outputting direct motor control to perform the task. RT2 demonstrated the ability to get drinks for a person, clean up messes, or perform simple fetch and placement tasks in kitchens, including manipulating drawers. The ability to demonstrate proficiency in tasks in a human environment is no small feat.

Researchers also applied chain of thought style prompting, having the vision aspect of the model produce an additional plan step in its output. The prompted plan would describe the purpose of the action the robot is about to take in natural language prior to outputting its action tokens. Researchers observed a noticeable improvement in performance for complicated tasks when the VLA did this.

Will we see RT2 in the wild?

As it stands, unlikely. RT2 suffers in three regards for likely adoption.

The first is cost; RT2 takes significant time and effort to create a reinforcement model for each of the desired possible actions of the robots. It took months of significant effort with expensive robotic platforms to create a dataset of skills.

Additionally, RT2 is not flexible towards adopting new capabilities. Demonstrations need to be created, annotated data collated, and the model retrained. Any significant change; new skills, domain switch, reasonably different environments, or hardware changes on the robot; trigger the need for retraining. It is possible that transfer learning could lower the barrier, but it still requires significant hardware to train the model.

Finally, RT2 requires significant hardware to run inference on. The researchers themselves admit that the model is larger than any they could find prior by over an order of magnitude. The hardware is restrictive to robotic applications - power, cooling, and size requirements making it a poor fit for a mobile platform. The researchers used a multi-TPU cloud service that handled robot queries over a network connection to control the robots. The 5B parameter model ran at a frequency of around 5Hz, whereas the largest model, the 55B parameter model ran at approximately 1-3Hz. While the results are impressive, the robots were slow. This also prevents any high-frequency control applications from being a suitable fit.