Repeatable Dev Environments for ROS2

tldr

ROS/ROS2 projects are notoriously annoying to get into a repeatable, isolated dev environment. I write about my initial look into this problem and some of the proposed solutions I tried. I present my imperfect solution, using both Docker and VMs to wrap my whole dev environment with… moderate success.

The Why

ROS2 (I’ll mostly focus on ROS2 in this post, but some of what I discuss here is applicable to a ROS environment as well) assumes that you’ll be dedicating the entirety of the given system to ROS. Its install methods rely on a set of Debian packages on top of its custom build system. This makes sense; ROS is for robotics, and low level C code is the only way to get the performance required (well, Rust, but that’s still undergoing adoption in the robotics space and there’s a lot of legacy C code). The problem is the dev’s machine - it’s a pain to have multiple versions installed or to experiment rapidly.

My background in software was originally on the web tech side, wherein projects are expected to declare their requirements in a single expected place (requirements.txt, package.json, go.mod, on and on). Additionally we expect to work on multiple projects at once, sometimes with vastly different versions of the language or dependencies. For Python we have virtualenv and pyenv (as an aside, I hate Python versioning, but it works and I won’t go off on a rant), Node expects all packages to be self contained (and has nvm or similar tooling), and Go is isolated to the project folder level with gvm for versioning the language.

This is invaluable; developers can be far more productive if the tooling allows them to quickly get up to speed, clone environments for testing, and freely experiment with work and upgrades without losing hours to environment setup. Fast, deployable, repeatable environments make life so much easier.

I’ve come to expect environments to be clearly defined in a software project, from the expected language version to the dependencies used, and to have that project isolated to its own environment without side effects. This is just not possible with ROS2 projects without some trickery.

I’m working on a project now where the other people involved are not as experienced in software engineering, and I’m trying to make it as easy as possible for them to get started. Similarly, I don’t want to spend my time hunting down differences in our environments when debugging, or worrying about problems installing and configuring environments. If I could just provide everyone with a set of tools that prepares a cloned environment rapidly, it’d smooth over so much of the project.

Thus began my quest to figure out some pattern of approaching ROS projects that met the idea of isolated repeatable environments. Is this the best solution? I sure as hell hope not; while this works, I feel like it’s not smooth enough to be the way. I’m hoping to either refine it or be dumbfounded when someone points out an easier approach that I somehow missed. That being said, maybe someone out there will find this useful, so… here we go.

The Goal

Our goal in one sentence: easily repeatable, self-contained ROS2 environments for rapid development that my teammates can get up and running quickly.

Or, put even more succinctly - avoid having to hear "…but it works on my machine" ever.

By the end of this, we’ll have:

- A Docker container setup

- A VM setup for GUI usage

- A command runner to simplify everything

- VS Code integration for “native”-esque development

Docker

Self contained isolated environments? Sounds like a pitch for Docker. Let’s take a look at what the landscape shows. OSRF (Open Source Robotics Foundation) maintains a ROS image that, through tags, encapsulates all major releases of ROS and ROS2. Note that for this writing we’ll be looking at ROS2 Humble Hawksbill.

There are some notable tag variants to take note of alongside the version names:

- ros-core for a minimal ROS installation

- ros-base for all basic tools and libraries (equivalent to the LTS and latest versions)

- ros1-bridge includes the ROS1 bridge libraries for a hybrid system

- perception to include the ROS Perception libraries (note that this is ROS1)

You also have the desktop images here which include GUIed applications for ROS2 and a window manager.

You can also opt to install your own desktop environment and install ROS yourself for full customization. I’ll continue with this approach as it allows the most flexibility, but it’s trivial to swap out different pieces for what you want to do.

Here’s the example Dockerfile that I used for ROS2 Humble:

FROM ubuntu:jammy

# Set early so apt never stops the build on an interactive prompt (e.g. tzdata)
ENV DEBIAN_FRONTEND=noninteractive

# ==========================================
# 1. Installing ROS Humble
# ==========================================

# -------------
# 1.1 - Locale Setup
# -------------
RUN apt update
RUN apt install -y locales
RUN locale-gen en_US en_US.UTF-8
RUN update-locale LC_ALL=en_US.UTF-8 LANG=en_US.UTF-8
# ENV persists into later layers; a RUN export would only last for that single layer
ENV LANG=en_US.UTF-8

# -------------
# 1.2 - Add Additional Repositories
# -------------
RUN apt install -y software-properties-common
RUN add-apt-repository universe
RUN apt update
RUN apt install -y curl
RUN curl -sSL https://raw.githubusercontent.com/ros/rosdistro/master/ros.key -o /usr/share/keyrings/ros-archive-keyring.gpg
# No sudo here: the image build already runs as root
RUN echo "deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/ros-archive-keyring.gpg] http://packages.ros.org/ros2/ubuntu $(. /etc/os-release && echo $UBUNTU_CODENAME) main" | tee /etc/apt/sources.list.d/ros2.list > /dev/null

# Finally, refresh the package lists:
RUN apt update
# Recommended as ROS2 is tied tightly to ubuntu releases apparently
RUN apt upgrade -y

# -------------
# 1.3 - Install ROS2
# -------------
RUN apt install -y ros-humble-ros-core
RUN apt install -y python3-colcon-common-extensions

# -------------
# 1.4 - Source ROS
# -------------
# RUN echo 'source /opt/ros/humble/setup.bash' >> /home/vagrant/.bashrc

# ==========================================
# 2. Installing Navigation2
# ==========================================

# -------------
# 2.1 - Install Navigation 2
# -------------
RUN apt install -y ros-humble-navigation2
RUN apt install -y ros-humble-nav2-bringup
RUN apt install -y ros-humble-slam-toolbox
RUN apt install -y ros-humble-tf-transformations

# ==========================================
# 3. Installing other tools
# ==========================================
RUN curl -Lk 'https://code.visualstudio.com/sha/download?build=stable&os=cli-alpine-x64' --output vscode_cli.tar.gz
RUN tar -xf vscode_cli.tar.gz
RUN mv code /usr/local/bin/

COPY ./envs/ros_entrypoint.sh /
ENV ROS_DISTRO=humble
ENTRYPOINT [ "/bin/bash", "/ros_entrypoint.sh" ]
CMD ["bash"]

X passthrough

Let’s talk about running GUI’ed applications on Docker. Docker can do it, but it’s a bit of a pain. The ROS wiki has a pretty good set of tutorials on the approaches that one can take to accomplish this. I got local X window forwarding working with some hacking around, but I will note that it was not exactly performant and regularly had rendering glitches that made the applications hard to use. This option doesn’t fly for a multi-OS team though, since it requires that the host machine is using an X server; so Mac and Windows users are out of luck.

It also suggests a VNC approach; I tried this as well with varying success. Performance was very much an issue in Gazebo, with an especially low framerate.

After a few days of experimenting with trying to maximize performance, I couldn’t get it to work reliably or easily enough to utilize. I had to start considering virtual machines.

VMs and vagrant

Years ago, before the Docker and container craze, there was vagrant, a fun little tool that aimed to automate VM creation and configuration in a repeatable, committable manner. By just typing vagrant up, your project’s specified Vagrantfile would define all that you’d need to download, configure, and set up a VM uniquely for that project, or boot one if it was already set up. Combined with seamless file syncing between the VM and host, and easy SSH access for terminal work, it was a pretty nice tool. I just fell out of using it due to Docker.

Tangent: there’s also Packer, another tool that paired well with vagrant to create machine images as committable code. I don’t use it here, but it’s worth mentioning if you’re giving vagrant a look. Also it was one of my first open source libraries, which apparently some people still use even after ten years, which is always a great feeling.

There’s just one thing that VMs do well that Docker can’t do without the hacks listed above - GUI’ed applications. The clear view into what’s happening that rviz, Gazebo, and other ROS applications provide is invaluable, so it’s worth the effort of exploring the VM solution.

The Vagrantfile

We’re building off of the base Ubuntu image - specifically jammy. ROS2 is tied to specific versions of Ubuntu, so be sure to match them accordingly; since we’re using Humble, we’ll need jammy.
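As a rough sketch of that matching rule, here’s how a setup script could verify the base OS before installing anything. The helper names (parse_os_release, matches_distro) are hypothetical, and the mapping only lists a few pairings I’m sure of; it is not an exhaustive table.

```python
from __future__ import annotations

# A few known ROS2 distro -> Ubuntu codename pairings (not exhaustive).
ROS2_UBUNTU_CODENAME = {
    "foxy": "focal",
    "humble": "jammy",
    "jazzy": "noble",
}


def parse_os_release(text: str) -> dict[str, str]:
    """Parse KEY=value lines in the /etc/os-release format."""
    fields: dict[str, str] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        fields[key] = value.strip().strip('"')
    return fields


def matches_distro(ros_distro: str, os_release_text: str) -> bool:
    """True when the OS codename is the one the given ROS2 distro targets."""
    expected = ROS2_UBUNTU_CODENAME[ros_distro]
    return parse_os_release(os_release_text).get("UBUNTU_CODENAME") == expected
```

Inside a box you’d feed it the contents of /etc/os-release, e.g. matches_distro("humble", open("/etc/os-release").read()).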

Below is an example of a Vagrantfile pulling Ubuntu, installing the Desktop for GUI applications, and installing and configuring ROS2 and nav2 for a simple setup. This can be easily expanded as needed. It assumes VirtualBox as the VM tooling.

# -*- mode: ruby -*-
# vi: set ft=ruby :

Vagrant.configure("2") do |config|
  config.vm.box = "ubuntu/jammy64"
  config.vm.synced_folder "./ros_ws", "/home/vagrant/ros_ws"
  config.vm.synced_folder "./.code-server", "/home/vagrant/.code_server"

  config.vm.provider "virtualbox" do |vb|
    # Display the VirtualBox GUI when booting the machine
    vb.gui = true
    vb.cpus = "4"
    # Customize the amount of memory on the VM:
    vb.memory = "16384"
    vb.customize ["modifyvm", :id, "--graphicscontroller", "vmsvga"]
    vb.customize ["modifyvm", :id, "--vram", "128"]
  end

  config.vm.provision "shell", inline: <<-SHELL
    echo "==========================================\n"
    echo "1. Initial OS + Ubuntu Desktop Setup\n"
    echo "==========================================\n\n"
    apt-get update
    apt-get install -y ubuntu-desktop
    apt-get install -y virtualbox-guest-dkms virtualbox-guest-utils virtualbox-guest-x11

    echo "\n\n"
    echo "==========================================\n"
    echo "2. Installing ROS Humble\n"
    echo "==========================================\n\n"

    echo "\n\n"
    echo "-------------\n"
    echo "2.1 - Locale Setup\n"
    echo "-------------\n\n"
    apt update
    # -y so provisioning never stalls on a confirmation prompt
    apt install -y locales
    locale-gen en_US en_US.UTF-8
    update-locale LC_ALL=en_US.UTF-8 LANG=en_US.UTF-8
    export LANG=en_US.UTF-8

    echo "\n\n"
    echo "-------------\n"
    echo "2.2 - Add Additional Repositories\n"
    echo "-------------\n\n"
    apt install -y software-properties-common
    add-apt-repository universe
    apt update
    apt install -y curl
    curl -sSL https://raw.githubusercontent.com/ros/rosdistro/master/ros.key -o /usr/share/keyrings/ros-archive-keyring.gpg
    echo "deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/ros-archive-keyring.gpg] http://packages.ros.org/ros2/ubuntu $(. /etc/os-release && echo $UBUNTU_CODENAME) main" | sudo tee /etc/apt/sources.list.d/ros2.list > /dev/null

    # Finally, refresh the package lists:
    apt update
    apt upgrade -y # Recommended as ROS2 is tied tightly to ubuntu releases apparently

    echo "\n\n"
    echo "-------------\n"
    echo "2.3 - Install ROS2\n"
    echo "-------------\n\n"
    apt install -y ros-humble-desktop # Includes rviz + demos

    echo "\n\n"
    echo "-------------\n"
    echo "2.4 - Source ROS\n"
    echo "-------------\n\n"
    echo 'source /opt/ros/humble/setup.bash' >> /home/vagrant/.bashrc

    echo "\n\n"
    echo "==========================================\n"
    echo "3. Installing Navigation2\n"
    echo "==========================================\n\n"

    echo "\n\n"
    echo "-------------\n"
    echo "3.1 - Install Navigation 2\n"
    echo "-------------\n\n"
    apt install -y ros-humble-navigation2
    apt install -y ros-humble-nav2-bringup

    echo "\n\n"
    echo "-------------\n"
    echo "3.2 - Addendum - Install Cyclone DDS\n"
    echo "-------------\n\n"
    apt install -y ros-humble-rmw-cyclonedds-cpp
    echo 'export RMW_IMPLEMENTATION=rmw_cyclonedds_cpp' >> /home/vagrant/.bashrc
    apt install -y ros-humble-slam-toolbox
    apt install -y ros-humble-tf-transformations

    echo "\n\n"
    echo "==========================================\n"
    echo "4. Installing other tools\n"
    echo "==========================================\n\n"
    apt install -y python3-colcon-common-extensions
    curl -Lk 'https://code.visualstudio.com/sha/download?build=stable&os=cli-alpine-x64' --output vscode_cli.tar.gz
    tar -xf vscode_cli.tar.gz
    mv code /usr/local/bin/
  SHELL
end

Once this is set as your Vagrantfile, you only need to run vagrant up and wait for it to run. vagrant ssh will get you into the box and working locally with ROS2 CLI apps. Since we’re installing the desktop from scratch, a reset is necessary after first install to get the desktop running. Note that enabling 3d acceleration within VirtualBox helps a lot with Gazebo and the rest.

just do it

So far our setup is great, with a lot of environment setup handled for us. Unfortunately it’s still a pain to interact with some of these environments; specifically, there are long commands with a lot of flags to remember and type each time we want to do something. Traditionally this would be handled by using makefiles and a bunch of .PHONY targets. Instead I’ve come to appreciate another command-runner in just.

just has been great, acting as a really easy shared interface for interacting with your project on the command line. It neatly coordinates common actions and scripts without having to point people at docs all the time. It also supports language specific commands; so if it’s easier just to Python script something rather than do a bash one-liner, you can do it.

command:
    #!/usr/bin/env python3
    print('Hello from python!')

One wishlist item I’d want out of just? Support for nested commands, ie just command subcommand to make it neater. You can see my desired use of that in my naming scheme for just commands.

I built up a just file to handle all of the more complex commands for common tasks across the board for the project. A sample of the just file is below. We start with common python project commands:

# lint python project code
lint:
    flake8 ros_ws/src

isort:
    isort ros_ws/src

black:
    black ros_ws/src

fix: isort black lint

Bring in the docker stuff:

docker-build:
    docker build -t project-name .

docker-rm-image:
    docker image rm -f project-name

docker-halt:
    docker stop project-name

docker-rebuild: docker-rm-image docker-build

# docker ros2 core
docker-ros:
    docker ps | grep project-name >/dev/null || \
    docker run \
    -it --rm \
    --name project-name \
    --mount type=bind,source=$(realpath .)/ros_ws,target=/ros_ws \
    --mount type=bind,source=$(realpath .)/.code-server,target=/code-server \
    --mount type=bind,source=$(realpath .)/envs/.bashrc,target=/root/.bashrc \
    project-name \
    bash

# docker bash shell into core ros2 server (if the core is not running, this will be the core)
docker-bash: docker-ros
    docker exec -it project-name /bin/bash

Sharp readers might be wondering what docker ps | grep project-name >/dev/null || is; specifically, it’s checking for the existence of a docker container already running with the given name; if it does not detect it, then the next command is executed. Otherwise, it skips the command. This allows us to run the start command idempotently, allowing us to list it as a prerequisite for other commands such as the docker-bash command.
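If you’d rather script that check than lean on grep, here’s a minimal Python sketch of the same idempotent start. The function names are my own invention for illustration; the only Docker calls assumed are docker ps with a --format template and docker run. One difference worth noting: grep matches substrings, so a container called project-name-old would also count as "running", whereas this version compares exact names.

```python
from __future__ import annotations

import subprocess


def running_container_names(ps_output: str) -> set[str]:
    """Split the output of `docker ps --format '{{.Names}}'` into a set of names."""
    return {line.strip() for line in ps_output.splitlines() if line.strip()}


def needs_start(name: str, ps_output: str) -> bool:
    """True when no running container has exactly this name."""
    return name not in running_container_names(ps_output)


def ensure_started(name: str, run_args: list[str]) -> bool:
    """Start the container only if it is not already running.

    Returns True if `docker run` was invoked, False if it was skipped.
    """
    ps = subprocess.run(
        ["docker", "ps", "--format", "{{.Names}}"],
        capture_output=True,
        text=True,
        check=True,
    )
    if not needs_start(name, ps.stdout):
        return False
    subprocess.run(["docker", "run", *run_args], check=True)
    return True
```

Calling ensure_started twice in a row is safe: the second call sees the container in the docker ps output and does nothing, which is exactly the property that lets the recipe act as a prerequisite.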

There’s also one noteworthy addition here - realpath. This is required specifically for Docker on Mac to get absolute paths for volume binding. You can install it on Mac using MacPorts.
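If installing realpath is a hassle, the same absolute-path resolution can be sketched in Python with pathlib. The bind_mount helper below is hypothetical; it just builds the --mount argument in the same type=bind,source=...,target=... form used in the recipe above.

```python
from __future__ import annotations

from pathlib import Path


def bind_mount(source: str, target: str, base: Path | None = None) -> list[str]:
    """Build a `--mount` argument pair with an absolute source path.

    `source` is resolved relative to `base` (the current directory by default),
    which is the job `realpath` does in the shell version.
    """
    base = base or Path.cwd()
    absolute = (base / source).resolve()
    return ["--mount", f"type=bind,source={absolute},target={target}"]
```

For example, bind_mount("ros_ws", "/ros_ws") returns two list items that can be spliced straight into a docker run argument list.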

Finally set up our VM:

vm-start:
    vagrant up

vm-stop:
    vagrant halt

vm-rebuild:
    vagrant destroy -f
    vagrant up

vm-bash: vm-start
    vagrant ssh

Note that to have just list all available commands by default, you can add this at the top of your justfile:

default:
    just --list

Python Modules

Typically I’d run a virtual environment for a given Python project, and then would expect pip install -r requirements.txt or some equivalent to setup all the necessary modules within that virtual environment. We need something similar for our Docker and VM project environments too.

Added to our justfile for the Docker container:

docker-pip-install:
    docker exec project-name pip3 install -r /home/vagrant/ros_ws/src/requirements.txt

…and for the VM…

vm-pip-install: vm-start
    vagrant ssh -c "pip3 install -r /home/vagrant/ros_ws/src/requirements.txt"

This assumes a singular Python environment for all executing ROS2 modules; I can see this becoming an issue in a more complex project where one could encounter conflicting dependency versions.
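To get early warning of that, one option is a small check that scans every package’s requirements for clashing pins before installing into the shared environment. This is only a sketch under simple assumptions: find_pin_conflicts is a hypothetical helper, it only understands exact == pins, and it ignores ranges, extras, and anything fancier.

```python
from __future__ import annotations

from collections import defaultdict


def find_pin_conflicts(requirements: dict[str, str]) -> dict[str, set[str]]:
    """Find packages pinned to more than one exact version.

    `requirements` maps a label (e.g. a ROS2 package name) to the text of its
    requirements file. Only `name==version` lines are considered.
    """
    pins: dict[str, set[str]] = defaultdict(set)
    for text in requirements.values():
        for line in text.splitlines():
            # Drop comments and environment markers before looking for a pin.
            line = line.split("#", 1)[0].split(";", 1)[0].strip()
            if "==" not in line:
                continue
            name, _, version = line.partition("==")
            pins[name.strip().lower()].add(version.strip())
    return {name: versions for name, versions in pins.items() if len(versions) > 1}
```

An empty result means no two files pin the same package to different versions; anything else is a conflict that a single shared environment can’t satisfy.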

VS Code, autosuggest, and code servers

Now we enter the nitpicky. I like autosuggest. Sure, it’s good to know the libraries and frameworks you’re working with well enough that you don’t need the autosuggest to show you what the attached functions or properties of an object are, but it’s still helpful. Hover-over documentation versus having to hunt down the docs is also a nicety I prefer to have.

The problem with our approach so far is that ROS2 installs its libraries, including its Python libraries, globally and doesn’t offer a pip installable version. Thus, we can’t have an isolated Python environment easily that allows our IDEs like VS Code to have autosuggest and hover-over documentation. We could run VS Code inside our VM, but then that’s an annoying UI hurdle to add, especially if we still primarily want to work on our host machine.

Well, it turns out VS Code has some fun remote code tunnel features built in; the UI can be separated from the backend, called the code server. Eagle eyed people might have noticed in the VM and containers I downloaded a code server to both:

curl -Lk 'https://code.visualstudio.com/sha/download?build=stable&os=cli-alpine-x64' --output vscode_cli.tar.gz

…as designated via this doc.

The plan - we’ll start a code server on the VM or docker container, try to give it some persistence (so a container restart or a VM rebuild won’t force us to set it up again), and try to get it to work like our desired local instance.

For persistence, you can specify where the code server stores its data via the --cli-data-dir flag. I’ve set it to a folder in my project called .code-server in the example, and set a placeholder file so git would note it. As long as we specify that folder and sync it to the VM/container, we should have persistence.

Once you have the code server on the container/VM, you can launch it with

code tunnel --name <project-name> --accept-server-license-terms --no-sleep --cli-data-dir <.code-server folder>

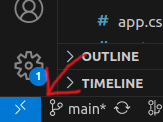

… with the flags being self explanatory. The name flag will allow you to easily access the code server from your VS Code. On your local/host machine, you can click the bottom left corner (the blue connection symbol) to now access the tunnel, or access the remote tunnel through your command palette.

If you’ve connected your VS Code to GitHub for settings sync, then all of your preferred extensions, config options, and themes will get synced down as soon as you log into the code server. Yes, you’ll have to do that even with your host machine already being set up, as the extensions and settings live on the code server and not the IDE application.

With this we now have local code completion running with our local tooling. Let’s use just to make it a bit easier to run:

# docker vs code environment
docker-vs-code: docker-ros
    docker exec -it project-name code tunnel \
    --name project-name --accept-server-license-terms \
    --cli-data-dir /code-server --no-sleep

and for VMs:

vm-vs-code: vm-start
    vagrant ssh -c "code tunnel \
    --name project-name \
    --accept-server-license-terms \
    --no-sleep \
    --cli-data-dir /home/vagrant/.code_server"

All together

I threw all of this into a stand alone repository here, with a complete quick start guide for setting everything up.

Performance Review

So how did it handle my first major ROS2 project? I’d give it a C-. Let’s go through the problems I encountered and what I had to do to fix them. And yes, that includes that one damned bug.

Total graphical lockup

There is a bug I’m going to mention here that has been plaguing me; I’ve seen it across both my laptop and my desktop rigs and am hard-pressed to venture a guess on how to solve it. If you’re running Gazebo, at some point the VM will simply freeze graphically. And I do mean graphically - the virtual screen will never update again, but you can ssh into the box and see that everything is running just fine; all processes will still report and chug along as expected, even stuff interacting with the Gazebo simulation. No programs report as frozen, and killing Gazebo or any other intensive programs will not save you. As far as I can tell the only recourse is a complete reboot of the VM.

It’s annoying but I’m unsure what’s causing it, or if it’s only me that suffers it at the moment. I’ve experimented with raising memory, tracking resource utilization during it, and several roundabout ways to “unfreeze” the graphics driver but nothing seems to give way to some understanding. Strangely I never had this reported from any of the other teammates using this tooling, but I suspect most were not using the simulator in the VM.

Switching between environments

This was an oversight I didn’t encounter until I found myself working in Docker and suddenly wanted to swap over to the VM environment. The usernames and resulting userspaces/ids of who is building, and thus who owns the resulting colcon build output, are different in each environment; thrice over if you include your own host environment.

Thus; if you build in one environment, and swap to another, you’ll run into either:

- The linked libraries are in different spots as they’re based on the absolute path with the username, resulting in nothing working without a rebuild, which leads to…

- Building fails because you don’t own the built files, requiring you to escalate to remove them and build from scratch anyway.
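A quick way to spot the second case before a build blows up is to look for colcon output that belongs to someone else. The sketch below is my own illustration, not part of the tooling: foreign_owned is a hypothetical helper, it assumes colcon’s default build, install, and log directories inside the workspace, and it relies on POSIX user ids.

```python
from __future__ import annotations

import os
from pathlib import Path

# colcon's default output directories, relative to the workspace root.
COLCON_OUTPUT_DIRS = ("build", "install", "log")


def foreign_owned(workspace: Path, uid: int) -> list[Path]:
    """List colcon output paths in `workspace` not owned by the given user id."""
    found: list[Path] = []
    for name in COLCON_OUTPUT_DIRS:
        root = workspace / name
        if not root.exists():
            continue
        for path in [root, *root.rglob("*")]:
            # lstat so symlinks are checked themselves, not their targets.
            if path.lstat().st_uid != uid:
                found.append(path)
    return found
```

Running foreign_owned(Path("ros_ws"), os.getuid()) from whichever environment you just switched into gives a list of everything that would need to be removed (with escalation) before a clean rebuild.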

In either case, you don’t have the seamless simple switching you’d like to see jumping between them. Is this an edge case? I’m not so sure; my original proposed idea was that the Docker environment would be the target environment for most development wherein the VM could be utilized for only Gazebo/RViz/graphical applications, but maybe that concept was obtuse to start? The initial discovery of this bug did come from one of my teammates trying this.

I needed a quick fix; to this end, I tried to set the Docker environment to match the vagrant username that vagrant environments use by default, but this doesn’t solve the host environment issue. It’s also sinfully ugly.

# To make life easier, we are creating a vagrant user so it
# matches the VM so you can bounce between VM and Docker
# container easily
RUN useradd -m vagrant
RUN usermod -a -G root vagrant
RUN echo 'vagrant ALL=(ALL) NOPASSWD: ALL' >> /etc/sudoers
RUN mkdir -p /home/vagrant/ros_ws

A smarter approach would be to adopt the host machine’s username across all environments, but then you still have issues of absolute paths not matching. While I am able to match it here to be in the ~/ros_ws home directory, that’s not where I would keep the files locally, creating a mismatch anyway.

VM Gazebo was a PITA to use

Even with all the acceleration enabled, and ignoring that one damned bug with the freezing, I still had issues utilizing Gazebo; once the environment started getting large and more graphically intense, performance dropped to sub 10 frames a second; not exactly ideal.

Is this useable then?

I think it needs work; I’ll try to iterate on the issues over time as I work on additional ROS2 projects. For now, I can say that it certainly sped up development for a number of my teammates, but I ultimately abandoned it once I started working more regularly with the simulator and had to quickly iterate on that. Even with all of the environment woes, several parts of the setup were still great; defining the environments as committable code led to massive time savings, probably clearing out a lot of headache-inducing bugs and cooperative debugging sessions. A lot of the build tooling was still super useful and could be repurposed for the local environment, and kept development moving at a decent pace.

I’m open to suggestions or other tools to try to solve this problem; for now I’ll call this a good experiment.