This past weekend I participated in the Replit Hackathon held by SDx. It was a fun event.

It may seem odd trying my hand at vibe coding, given its weird hype and counter culture built around it, and the fact that I am a skilled and experienced developer. I wanted to explore it, see what the tools and workflow was like, learn what is possible without my traditional approach to problems.

To that end my three person team worked on a fascinating concept. We called it adict - demonstrating that naming still remains one of the hardest aspects of some projects - an app that used profiles of simulacrums of people with varying backgrounds - gender, age, beliefs, region, job, income, education level, etc - and tasked AI with determinnig the mental and emotional response of that individual to a given ad. Yes, there’s lots of room for error there, but it’s a 4 hour hackathon, so bare with me there.

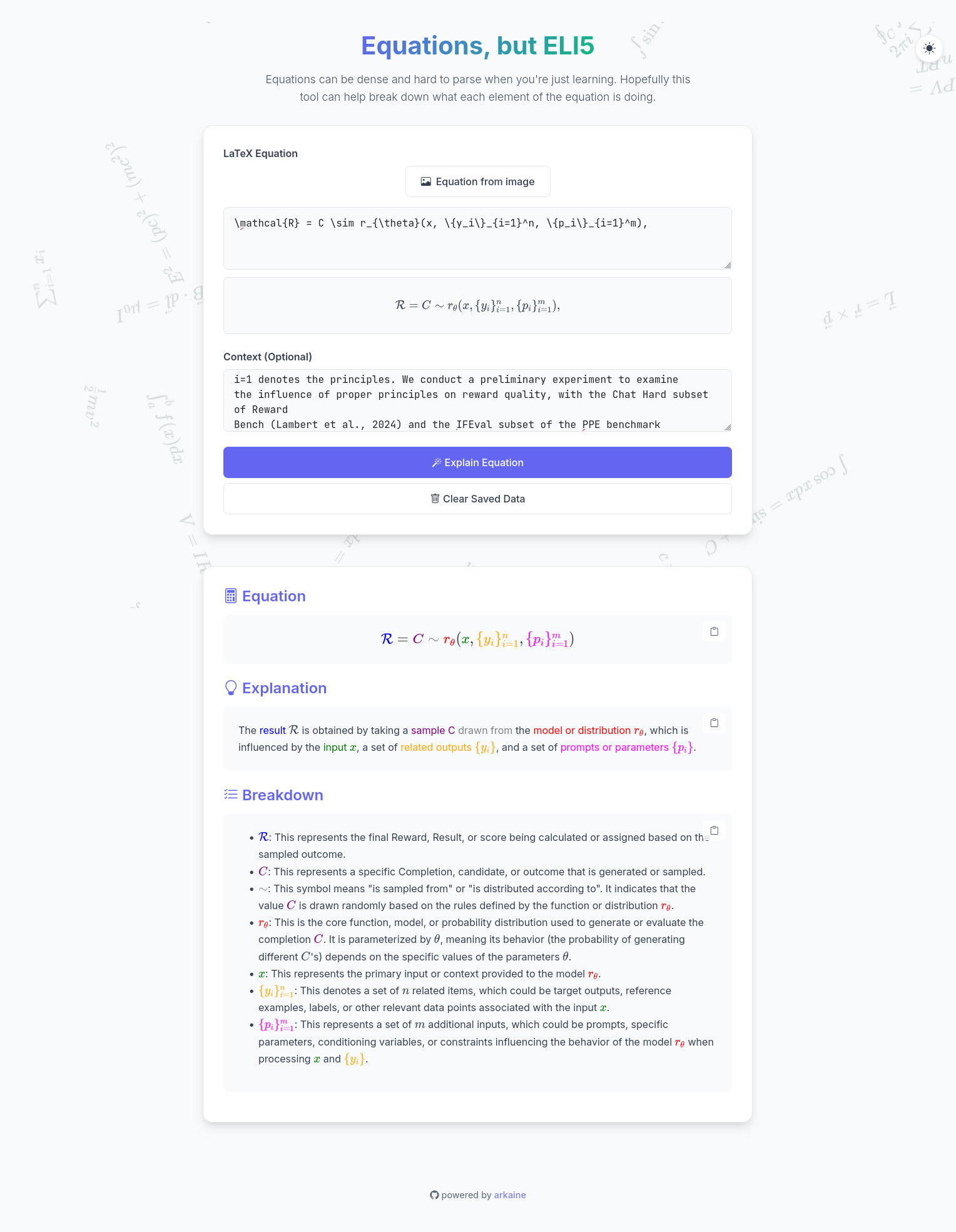

I used arkaine to build out the agent that would consume the ad, a profile, and then utilized Gemini 2.5 Pro to develop the response. It worked pretty well!

We did, however, have significant issue tying the backend to the frontend through Replit. A constant cycle of frontend errors that ultimately should have been dealt with manually ate too much of our time and resulted in a poor final product. But that’s ok - we learned a lot!

Replit seems to be awesome at getting a quick outline and mock up done, but then struggles with the more complex tasks. I’ve experimented with different workflows for it, but it does seem often circuitious to get certain bugs fixed. My own intuition on where the tool would struggle - edge cases that were not predictable or common for the unique piece of software you’re making - proved accurate.

That being said, I do like Replit. If, for nothing else, then quick prototypes and mockups. I think people underestimate how powerful that can be. Hell I think most people underestimate how simple most software solutions can (and should) be. Bespoke custom software isn’t a bad thing. It’s where everything we use now grew from. Maybe we’ll see a resurgence of in house tech solutions without the expensive overhead and billion subscriptions SaaS has devolved into.

I expect a fair amount of growth from Replit’s tools and I’m excited to keep an eye on it. I’m not as bullish as the hype cycle hucksters are, but I do think it’s a promising tool.

#vibe coding

#sdx

#replit